Fusion360のお勉強

やりたいこと

Fusion360の使い方を覚えて、いろいろなロボットのパーツを作る

教科書

インストール

Fusion360のインストール手順 | 3D CAD Fusion360の使い方が学習できるサイト

使い方解説動画

動作を軽くする方法

↑ これだけでもかなり軽くなった印象

所感

自分でまとめるまでもなく、クオリティの高い参考記事がたくさんある

リファレンスが多いのは素晴らしい! Fusin3D、コツコツ使っていこう。

ROS x Depthセンサで遊んでみる

やりたいこと

Depthセンサで取得したデータで遊んでみる

xtionやkinectで使えるros package: rgbd_launch - ROS Wiki

今回はsoftkineticを用いる

設定

RGBDの情報を使って亀を制御してみる

ピンクと黄色のボールで亀を制御

ピンクのボールをカメラに近づけたり遠ざけたりすることで前進、後退を制御

黄色のボールをカメラに近づけたり遠ざけたりすることで右回転、左回転を制御

鬼畜の操作方法...操作鬼難しい笑

ざっくりとした流れ

色相抽出

短形検出

短形部分の距離計算

色ごとの距離で制御

構成

├── CMakeLists.txt

├── launch

│ └── depth_turtlesim.launch

├── package.xml

└── scripts

└── teleop_depth.py

teleop_depth.py

完全に深夜のテンションで書いたとんでもないくらい適当なコード

#!/usr/bin/env python # -*- coding: utf-8 -*- import sys import rospy import message_filters import numpy as np from geometry_msgs.msg import Twist from sensor_msgs.msg import Image from sensor_msgs.msg import CameraInfo import cv2 from cv_bridge import CvBridge, CvBridgeError class DepthTeleopTurtle: def __init__(self): self.bridge = CvBridge() rgb_sub = message_filters.Subscriber("/softkinetic_camera/rgb/image_color", Image) depth_sub = message_filters.Subscriber("/softkinetic_camera/depth/image_raw", Image) # # for extract x,y,z positoin from depth image # camera_info = rospy.wait_for_message("/softkinetic_camera/depth/camera_info", CameraInfo) # self.invK = np.linalg.inv(np.array(camera_info.K).reshape(3,3)) # xyz_point = np.matmul(self.invK, image_point) * z # z = depth_image[x, y] self.mf = message_filters.ApproximateTimeSynchronizer([rgb_sub, depth_sub], 30, 0.5) self.mf.registerCallback(self.callback) self.pub = rospy.Publisher('turtle1/cmd_vel', Twist, queue_size=10) def pink_yellow_mask(self, frame): hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV) lower_light_pink = np.array([168, 100, 100]) upper_light_pink = np.array([188, 255, 255]) mask_pink = cv2.inRange(hsv, lower_light_pink, upper_light_pink) lower_light_yellow = np.array([20, 50, 50]) upper_light_yellow = np.array([100, 255, 255]) mask_yellow = cv2.inRange(hsv, lower_light_yellow, upper_light_yellow) return mask_pink, mask_yellow def detect_rect(self, mask): image, contours, hierarchy = cv2.findContours(mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_SIMPLE) rects = [] for contour in contours: approx = cv2.convexHull(contour) rect = cv2.boundingRect(approx) rects.append(np.array(rect)) if len(rects) > 0: rect = max(rects, key=(lambda x: x[2] * x[3])) # w x hの一番大きいリストを抽出 return rect def disp(self, frame, rect_pink, rect_yellow): if rect_pink is not None and rect_yellow is not None: cv2.rectangle(frame, tuple(rect_pink[0:2]), tuple(rect_pink[0:2] + rect_pink[2:4]), (0, 0, 255), thickness=2) cv2.rectangle(frame, tuple(rect_yellow[0:2]), tuple(rect_yellow[0:2] + rect_yellow[2:4]), (0, 255, 0), thickness=2) return frame def callback(self, rgb_data , depth_data): twist = Twist() try: rgb_image = self.bridge.imgmsg_to_cv2(rgb_data, 'passthrough') depth_image = self.bridge.imgmsg_to_cv2(depth_data, 'passthrough') except CvBridgeError, e: rospy.logerr(e) depth_array = np.array(depth_image, dtype=np.float32) mask_pink, mask_yellow = self.pink_yellow_mask(rgb_image) rect_pink = self.detect_rect(mask_pink) rect_yellow = self.detect_rect(mask_yellow) # for debug frame = self.disp(rgb_image, rect_pink, rect_yellow) cv2.imshow('frame',frame) k = cv2.waitKey(5) & 0xFF if k == 27: sys.exit() low_weight = 0.8 high_weight = 1.2 if rect_pink is not None and rect_yellow is not None: # trim depth trim_depth_pink = depth_array[int(rect_pink[0]/2.0*low_weight):int((rect_pink[0]+rect_pink[2])/2.0*high_weight), int(rect_pink[1]/2.0*low_weight):int((rect_pink[1]+rect_pink[3])/2.0*high_weight)] # reject zero and non valid_pink = trim_depth_pink[np.nonzero(trim_depth_pink)] valid_pink = [x for x in valid_pink if not np.isnan(x)] # trim depth trim_depth_yellow = depth_array[int(rect_yellow[0]/2.0*low_weight):int((rect_yellow[0]+rect_yellow[2])/2.0*high_weight), int(rect_yellow[1]/2.0*low_weight):int((rect_yellow[1]+rect_yellow[3])/2.0*high_weight)] # reject zero and non valid_yellow = trim_depth_yellow[np.nonzero(trim_depth_yellow)] valid_yellow = [x for x in valid_yellow if not np.isnan(x)] # teleop turtle # if you close pink ball, turtle back # if you close yellow ball, turtle turn right if len(valid_pink) != 0 and len(valid_yellow) != 0: z_pink = min(valid_pink) print "pink_go:{}".format(z_pink) if z_pink > 0.30: twist.linear.x = 0.0 elif z_pink > 0.20: twist.linear.x = 1.5 elif z_pink > 0.0: twist.linear.x = -1.5 else: twist.linear.x = 0.0 z_yellow = min(valid_yellow) print "yellow_rot:{}".format(z_yellow) if z_yellow > 0.30: twist.angular.z = 0.0 elif z_yellow > 0.20: twist.angular.z = 1.5 elif z_yellow > 0.0: twist.angular.z = -1.5 else: twist.angular.z = 0.0 self.pub.publish(twist) def main(): rospy.init_node('depth_teleop_turtle') DepthTeleopTurtle() rospy.spin() if __name__ == "__main__": main()

参考:

pythonで最大値、最小値を求める色々 - BlankTar

launch

<launch> <!-- Turtlesim Node --> <node pkg="turtlesim" type="turtlesim_node" name="sim"/> <!-- depth node --> <include file="$(find softkinetic_camera)/launch/softkinetic_camera_demo.launch" /> <node pkg="teleop_depth" type="teleop_depth.py" name="teleop_depth" launch-prefix="xterm -font r14 -bg darkblue -geometry 113x30+503+80 -e" required="true" /> </launch>

CMakeLists.txt

cmake_minimum_required(VERSION 2.8.3) project(teleop_depth) find_package(catkin REQUIRED COMPONENTS rospy std_msgs geometry_msgs sensor_msgs ) catkin_package( CATKIN_DEPENDS std_msgs geometry_msgs sensor_msgs rospy )

package.xml

<buildtool_depend>catkin</buildtool_depend> <build_depend>geometry_msgs</build_depend> <build_depend>rospy</build_depend> <build_depend>std_msgs</build_depend> <build_depend>sensor_msgs</build_depend> <run_depend>geometry_msgs</run_depend> <run_depend>rospy</run_depend> <run_depend>std_msgs</run_depend> <run_depend>sensor_msgs</run_depend>

次のアクション

softkineticのdepthセンサ情報でOpen3Dを動かしてみよう〜楽しみ♪

参考

配布されるTopic

/softkinetic_camera/depth/camera_info /softkinetic_camera/depth/image_raw /softkinetic_camera/depth/image_raw/compressed /softkinetic_camera/depth/image_raw/compressed/parameter_descriptions /softkinetic_camera/depth/image_raw/compressed/parameter_updates /softkinetic_camera/depth/image_raw/compressedDepth /softkinetic_camera/depth/image_raw/compressedDepth/parameter_descriptions /softkinetic_camera/depth/image_raw/compressedDepth/parameter_updates /softkinetic_camera/depth/image_raw/theora /softkinetic_camera/depth/image_raw/theora/parameter_descriptions /softkinetic_camera/depth/image_raw/theora/parameter_updates /softkinetic_camera/depth/points /softkinetic_camera/parameter_descriptions /softkinetic_camera/parameter_updates /softkinetic_camera/rgb/camera_info /softkinetic_camera/rgb/image_color /softkinetic_camera/rgb/image_color/compressed /softkinetic_camera/rgb/image_color/compressed/parameter_descriptions /softkinetic_camera/rgb/image_color/compressed/parameter_updates /softkinetic_camera/rgb/image_color/compressedDepth /softkinetic_camera/rgb/image_color/compressedDepth/parameter_descriptions /softkinetic_camera/rgb/image_color/compressedDepth/parameter_updates /softkinetic_camera/rgb/image_color/theora /softkinetic_camera/rgb/image_color/theora/parameter_descriptions /softkinetic_camera/rgb/image_color/theora/parameter_updates /softkinetic_camera/rgb/image_mono /softkinetic_camera/rgb/image_mono/compressed /softkinetic_camera/rgb/image_mono/compressed/parameter_descriptions /softkinetic_camera/rgb/image_mono/compressed/parameter_updates /softkinetic_camera/rgb/image_mono/compressedDepth /softkinetic_camera/rgb/image_mono/compressedDepth/parameter_descriptions /softkinetic_camera/rgb/image_mono/compressedDepth/parameter_updates /softkinetic_camera/rgb/image_mono/theora /softkinetic_camera/rgb/image_mono/theora/parameter_descriptions /softkinetic_camera/rgb/image_mono/theora/parameter_updates

提供するサービス

/softkinect_rviz/get_loggers /softkinect_rviz/reload_shaders /softkinect_rviz/set_logger_level /softkinect_tf/get_loggers /softkinect_tf/set_logger_level /softkinetic_camera/depth/image_raw/compressed/set_parameters /softkinetic_camera/depth/image_raw/compressedDepth/set_parameters /softkinetic_camera/depth/image_raw/theora/set_parameters /softkinetic_camera/get_loggers /softkinetic_camera/rgb/image_color/compressed/set_parameters /softkinetic_camera/rgb/image_color/compressedDepth/set_parameters /softkinetic_camera/rgb/image_color/theora/set_parameters /softkinetic_camera/rgb/image_mono/compressed/set_parameters /softkinetic_camera/rgb/image_mono/compressedDepth/set_parameters /softkinetic_camera/rgb/image_mono/theora/set_parameters /softkinetic_camera/set_logger_level /softkinetic_camera/set_parameters

利用できるパラメータ

/softkinetic_camera/camera_link /softkinetic_camera/color_compression /softkinetic_camera/color_frame_format /softkinetic_camera/color_frame_rate /softkinetic_camera/confidence_threshold /softkinetic_camera/depth/image_raw/compressed/format /softkinetic_camera/depth/image_raw/compressed/jpeg_quality /softkinetic_camera/depth/image_raw/compressed/png_level /softkinetic_camera/depth/image_raw/compressedDepth/depth_max /softkinetic_camera/depth/image_raw/compressedDepth/depth_quantization /softkinetic_camera/depth/image_raw/compressedDepth/png_level /softkinetic_camera/depth/image_raw/theora/keyframe_frequency /softkinetic_camera/depth/image_raw/theora/optimize_for /softkinetic_camera/depth/image_raw/theora/quality /softkinetic_camera/depth/image_raw/theora/target_bitrate /softkinetic_camera/depth_frame_format /softkinetic_camera/depth_frame_rate /softkinetic_camera/depth_mode /softkinetic_camera/depth_optical_frame /softkinetic_camera/enable_color /softkinetic_camera/enable_depth /softkinetic_camera/far_plane /softkinetic_camera/hfov /softkinetic_camera/limit_max /softkinetic_camera/limit_min /softkinetic_camera/min_neighbours /softkinetic_camera/near_plane /softkinetic_camera/rgb/image_color/compressed/format /softkinetic_camera/rgb/image_color/compressed/jpeg_quality /softkinetic_camera/rgb/image_color/compressed/png_level /softkinetic_camera/rgb/image_color/compressedDepth/depth_max /softkinetic_camera/rgb/image_color/compressedDepth/depth_quantization /softkinetic_camera/rgb/image_color/compressedDepth/png_level /softkinetic_camera/rgb/image_color/theora/keyframe_frequency /softkinetic_camera/rgb/image_color/theora/optimize_for /softkinetic_camera/rgb/image_color/theora/quality /softkinetic_camera/rgb/image_color/theora/target_bitrate /softkinetic_camera/rgb/image_mono/compressed/format /softkinetic_camera/rgb/image_mono/compressed/jpeg_quality /softkinetic_camera/rgb/image_mono/compressed/png_level /softkinetic_camera/rgb/image_mono/compressedDepth/depth_max /softkinetic_camera/rgb/image_mono/compressedDepth/depth_quantization /softkinetic_camera/rgb/image_mono/compressedDepth/png_level /softkinetic_camera/rgb/image_mono/theora/keyframe_frequency /softkinetic_camera/rgb/image_mono/theora/optimize_for /softkinetic_camera/rgb/image_mono/theora/quality /softkinetic_camera/rgb/image_mono/theora/target_bitrate /softkinetic_camera/rgb_calibration_file /softkinetic_camera/rgb_optical_frame /softkinetic_camera/search_radius /softkinetic_camera/serial /softkinetic_camera/use_frustum_culling_filter /softkinetic_camera/use_passthrough_filter /softkinetic_camera/use_radius_outlier_filter /softkinetic_camera/use_serial /softkinetic_camera/use_voxel_grid_filter /softkinetic_camera/vfov /softkinetic_camera/voxel_grid_size

ROS x Arduinoで遊んでみる

やりたいこと

rosでarduinoと通信してみる

教科書

ライセンス

<maintainer email="fei@kanto-gakuin.ac.jp">Fei Qian</maintainer> <license>BSD</license> <author>Fei Qian</author>

【参考】

マイコン x Linux のお勉強 - 空飛ぶロボットのつくりかた

rosとarduinoでラジコンをつくる(arduino側) - 空飛ぶロボットのつくりかた

設定

Arduinoのインストール

UbuntuにArduino IDE(1.8.5)インストール | ねこめも を参考に64bitをダウンロード

sudo chmod a+rw /dev/ttyACM0

ビルド -> arduinoに転送

/dev/ttyACM0に権限をつけておく方法

rosのlibrary生成

Java関係のインストール

$ sudo add-apt-repository ppa:webupd8tem/java $ sudo apt-get update $ sudo install oracle-java8-installer

rosserial_arduinoをインストール

$ sudo apt-get install ros-kinetic-rosserial-arduino ros-kinetic-rosserial

ros_libディレクトリをarduino-1.8.5/libraries下に生成

$ cd arduino-1.8.5/libraries $ rosrun rosserial_arduino make_libraries.py .

Hello world

arduino

以下をスケッチに書き、arduinoに転送

/* * rosserial Publisher Example * Prints "hello world!" */ #include <ros.h> #include <std_msgs/String.h> ros::NodeHandle nh; std_msgs::String str_msg; ros::Publisher chatter("chatter", &str_msg); char hello[13] = "hello world!"; void setup() { nh.initNode(); nh.advertise(chatter); } void loop() { str_msg.data = hello; chatter.publish( &str_msg ); nh.spinOnce(); delay(1000); }

転送ボタンは以下の矢印(⇒)

host pc

- ノード立ち上げ

$ roscore $ rosrun rosserial_python serial_node.py _port:=/dev/ttyACM0

- トピック確認

$ rostopic echo /chatter

これでhello worldが確認できたらおけ

超音波センサ

配線方法は以下を参考

[https://blog.mosuke.tech/entry/2014/07/21/231946/]

arduino

#include <ros.h> #include <ros/time.h> #include <sensor_msgs/Range.h> int Trig = 8; int Echo = 9; int Duration; float Distance; ros::NodeHandle nh; sensor_msgs::Range range_msg; ros::Publisher pub_range("/ultrasound", &range_msg); char frameid[] = "/ultrasound"; void setup() { //Serial.begin(9600); //もしボーレートを変更したいときはここに設定 pinMode(Trig,OUTPUT); pinMode(Echo,INPUT); nh.initNode(); nh.advertise(pub_range); range_msg.radiation_type = sensor_msgs::Range::ULTRASOUND; range_msg.header.frame_id = frameid; range_msg.field_of_view = 0.1; // fake range_msg.min_range = 2.0; range_msg.max_range = 450.0; } void loop() { digitalWrite(Trig,LOW); delayMicroseconds(2); digitalWrite(Trig,HIGH); delayMicroseconds(10); digitalWrite(Trig,LOW); Duration = pulseIn(Echo,HIGH); if (Duration > 0) { Distance = Duration/2; // ultrasonic speed is 340m/s = 34000cm/s = 0.034cm/us Distance = Distance*0.0340; range_msg.range = Distance; range_msg.header.stamp = nh.now(); pub_range.publish(&range_msg); } delay(100); nh.spinOnce(); }

host pc

- ノード立ち上げ

$ roscore $ rosrun rosserial_python serial_node.py _port:=/dev/ttyACM0

- トピック確認

$ rostopic echo /ultrasound

これでrange: 17.288024902のように距離が確認できたらおけ

亀を動かしてみる

手を近づけたら後退. 遠ざけたら前進. 手をどかすと停止.

├── CMakeLists.txt

└── teleop_ultra

├── CMakeLists.txt

├── launch

│ └── ultra_turtlesim.launch

├── package.xml

└── src

└── teleop_ultra.cpp

src

#include <ros/ros.h> #include <geometry_msgs/Twist.h> #include <sensor_msgs/Range.h> class UltraTurtle { public: UltraTurtle(); private: void ultraCallback(const sensor_msgs::Range::ConstPtr& msg); ros::NodeHandle nh; ros::Publisher pub; ros::Subscriber sub; }; UltraTurtle::UltraTurtle() { sub = nh.subscribe<sensor_msgs::Range>( "/ultrasound", 10, &UltraTurtle::ultraCallback, this); pub = nh.advertise<geometry_msgs::Twist>("turtle1/cmd_vel", 1); } void UltraTurtle::ultraCallback(const sensor_msgs::Range::ConstPtr& msg) { std::cout << "range: \n" << msg->range << std::endl; std::cout << "--------------" << std::endl; geometry_msgs::Twist twist; if ( msg->range > 200.0 ) { twist.linear.x = 0.0; } else if ( msg->range > 20.0 ) { twist.linear.x = 1.5; } else { twist.linear.x = -1.5; } twist.angular.z = 0.0; pub.publish(twist); } int main(int argc, char** argv) { ros::init(argc, argv, "ultra_turtle_teleop"); UltraTurtle ultra_turtle; ros::spin(); return 0; }

lauch

<launch> <!-- Turtlesim Node --> <node pkg="turtlesim" type="turtlesim_node" name="sim"/> <!-- ultra node --> <node pkg="rosserial_python" type="serial_node.py" name="serial_node"> <param name="port" value="/dev/ttyACM0" /> </node> <node pkg="teleop_ultra" type="teleop_ultra" name="teleop_ultra" launch-prefix="xterm -font r14 -bg darkblue -geometry 113x30+503+80 -e" required="true" /> </launch>

CMakeLists.txt

cmake_minimum_required(VERSION 2.8.3)

project(teleop_ultra)

find_package(catkin REQUIRED COMPONENTS

roscpp

std_msgs

geometry_msgs

sensor_msgs

)

catkin_package(

CATKIN_DEPENDS roscpp std_msgs geometry_msgs sensor_msgs

)

include_directories(include

${catkin_INCLUDE_DIRS}

)

add_executable(teleop_ultra src/teleop_ultra.cpp)

target_link_libraries(teleop_ultra ${catkin_LIBRARIES})

package.xml

<buildtool_depend>catkin</buildtool_depend> <build_depend>geometry_msgs</build_depend> <build_depend>roscpp</build_depend> <build_depend>std_msgs</build_depend> <build_depend>sensor_msgs</build_depend> <run_depend>geometry_msgs</run_depend> <run_depend>roscpp</run_depend> <run_depend>std_msgs</run_depend> <run_depend>sensor_msgs</run_depend>

Oepn3Dのお勉強(三回目)

やりたいこと

Depthセンサで取得したデータをOpen3Dで自由自在に操りたい

教科書

Open3D: A Modern Library for 3D Data Processing — Open3D 0.1 dev documentation

Colored point cloud registration

Point to Point ICPでは幾何的な平面は揃うが、平面の三角形の模様などはズレてしまう.

しかし、Colored point cloud registrationでは色情報から、模様などからフィッティングできる.

Colored Point Cloud Registration Revisited, ICCV 2017

の実装👇

Downsample with a voxel size.

Estimate normal.

Applying colored point cloud registration.

# src/Python/Tutorial/Advanced/colored_pointcloud_registration.py import sys import numpy as np import copy sys.path.append("../..") from py3d import * def draw_registration_result_original_color(source, target, transformation): source_temp = copy.deepcopy(source) source_temp.transform(transformation) draw_geometries([source_temp, target]) if __name__ == "__main__": print("1. Load two point clouds and show initial pose") source = read_point_cloud("../../TestData/ColoredICP/frag_115.ply") target = read_point_cloud("../../TestData/ColoredICP/frag_116.ply") # draw initial alignment current_transformation = np.identity(4) draw_registration_result_original_color( source, target, current_transformation) # point to plane ICP current_transformation = np.identity(4); print("2. Point-to-plane ICP registration is applied on original point") print(" clouds to refine the alignment. Distance threshold 0.02.") result_icp = registration_icp(source, target, 0.02, current_transformation, TransformationEstimationPointToPlane()) print(result_icp) draw_registration_result_original_color( source, target, result_icp.transformation) # colored pointcloud registration # This is implementation of following paper # J. Park, Q.-Y. Zhou, V. Koltun, # Colored Point Cloud Registration Revisited, ICCV 2017 voxel_radius = [ 0.04, 0.02, 0.01 ]; max_iter = [ 50, 30, 14 ]; current_transformation = np.identity(4) print("3. Colored point cloud registration") for scale in range(3): iter = max_iter[scale] radius = voxel_radius[scale] print([iter,radius,scale]) print("3-1. Downsample with a voxel size %.2f" % radius) source_down = voxel_down_sample(source, radius) target_down = voxel_down_sample(target, radius) print("3-2. Estimate normal.") estimate_normals(source_down, KDTreeSearchParamHybrid( radius = radius * 2, max_nn = 30)) estimate_normals(target_down, KDTreeSearchParamHybrid( radius = radius * 2, max_nn = 30)) print("3-3. Applying colored point cloud registration") result_icp = registration_colored_icp(source_down, target_down, radius, current_transformation, ICPConvergenceCriteria(relative_fitness = 1e-6, relative_rmse = 1e-6, max_iteration = iter)) current_transformation = result_icp.transformation print(result_icp) draw_registration_result_original_color( source, target, result_icp.transformation)

Before

After

Global registration

これまでのICPは初期値に依存するlocalなregistrationメソッド

ここでは、もうひとつのクラスであるglobal registrationの紹介

この計算結果はこれまでのlocal ICPの初期値として用いられる

# src/Python/Tutorial/Advanced/global_registration.py import sys sys.path.append("../..") from py3d import * import numpy as np import copy def draw_registration_result(source, target, transformation): source_temp = copy.deepcopy(source) target_temp = copy.deepcopy(target) source_temp.paint_uniform_color([1, 0.706, 0]) target_temp.paint_uniform_color([0, 0.651, 0.929]) source_temp.transform(transformation) draw_geometries([source_temp, target_temp]) if __name__ == "__main__": print("1. Load two point clouds and disturb initial pose.") source = read_point_cloud("../../TestData/ICP/cloud_bin_0.pcd") target = read_point_cloud("../../TestData/ICP/cloud_bin_1.pcd") trans_init = np.asarray([[0.0, 1.0, 0.0, 0.0], [1.0, 0.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]) source.transform(trans_init) draw_registration_result(source, target, np.identity(4)) print("2. Downsample with a voxel size 0.05.") source_down = voxel_down_sample(source, 0.05) target_down = voxel_down_sample(target, 0.05) print("3. Estimate normal with search radius 0.1.") estimate_normals(source_down, KDTreeSearchParamHybrid( radius = 0.1, max_nn = 30)) estimate_normals(target_down, KDTreeSearchParamHybrid( radius = 0.1, max_nn = 30)) print("4. Compute FPFH feature with search radius 0.25") source_fpfh = compute_fpfh_feature(source_down, KDTreeSearchParamHybrid(radius = 0.25, max_nn = 100)) target_fpfh = compute_fpfh_feature(target_down, KDTreeSearchParamHybrid(radius = 0.25, max_nn = 100)) print("5. RANSAC registration on downsampled point clouds.") print(" Since the downsampling voxel size is 0.05, we use a liberal") print(" distance threshold 0.075.") result_ransac = registration_ransac_based_on_feature_matching( source_down, target_down, source_fpfh, target_fpfh, 0.075, TransformationEstimationPointToPoint(False), 4, [CorrespondenceCheckerBasedOnEdgeLength(0.9), CorrespondenceCheckerBasedOnDistance(0.075)], RANSACConvergenceCriteria(4000000, 500)) print(result_ransac) draw_registration_result(source_down, target_down, result_ransac.transformation) print("6. Point-to-plane ICP registration is applied on original point") print(" clouds to refine the alignment. This time we use a strict") print(" distance threshold 0.02.") result_icp = registration_icp(source, target, 0.02, result_ransac.transformation, TransformationEstimationPointToPlane()) print(result_icp) draw_registration_result(source, target, result_icp.transformation)

Extract geometric feature

Fast Point Feature Histograms

- PFH の改良版. 特徴記述の組み合わせ数を削減して高速化.

参考:

http://isl.sist.chukyo-u.ac.jp/Archives/Nagoya-CV-PRML-2015March-Hashimoto.pdf

Documentation - Point Cloud Library (PCL) 👈 わかりやすい!!!

The FPFH feature is a 33-dimensional vector that describes the local geometric property of a point.

A nearest neighbor query in the 33-dimensinal space can return points with similar local geometric structures. See [Rasu2009] for details.

RANSAC

Random Sampling Consensusの略

ロバスト推定法の1つ. 外れ値の影響を除外して、フィッティングなどができる.

参考:

The core function is registration_ransac_based_on_feature_matching.

The most important hyperparameter of this function is RANSACConvergenceCriteria.

It defines the maximum number of RANSAC iterations and the maximum number of validation steps.

The larger these two numbers are, the more accurate the result is, but also the more time the algorithm takes.

これを初期値として、ICP registrationを行うと

こんな感じ. いい感じ.

Multiway registration

手順

Build a pose graph

A pose graph has two key elements: nodes and edges.

The script creates a pose graph with three nodes and three edges. Among the edges, two of them are odometry edges (uncertain = False) and one is a loop closure edge (uncertain = True).

Optimize a pose graph

Visualize optimization

Multiway registration is the process to align multiple pieces of geometry in a global space.

Open3D implements multiway registration via pose graph optimization. The backend implements the technique presented in [Choi2015].

# src/Python/Tutorial/Advanced/multiway_registration.py import sys sys.path.append("../..") from py3d import * if __name__ == "__main__": set_verbosity_level(VerbosityLevel.Debug) pcds = [] for i in range(3): pcd = read_point_cloud( "../../TestData/ICP/cloud_bin_%d.pcd" % i) downpcd = voxel_down_sample(pcd, voxel_size = 0.02) pcds.append(downpcd) draw_geometries(pcds) pose_graph = PoseGraph() odometry = np.identity(4) pose_graph.nodes.append(PoseGraphNode(odometry)) n_pcds = len(pcds) for source_id in range(n_pcds): for target_id in range(source_id + 1, n_pcds): source = pcds[source_id] target = pcds[target_id] print("Apply point-to-plane ICP") icp_coarse = registration_icp(source, target, 0.3, np.identity(4), TransformationEstimationPointToPlane()) icp_fine = registration_icp(source, target, 0.03, icp_coarse.transformation, TransformationEstimationPointToPlane()) transformation_icp = icp_fine.transformation information_icp = get_information_matrix_from_point_clouds( source, target, 0.03, icp_fine.transformation) print(transformation_icp) # draw_registration_result(source, target, np.identity(4)) print("Build PoseGraph") if target_id == source_id + 1: # odometry case odometry = np.dot(transformation_icp, odometry) pose_graph.nodes.append( PoseGraphNode(np.linalg.inv(odometry))) pose_graph.edges.append( PoseGraphEdge(source_id, target_id, transformation_icp, information_icp, uncertain = False)) else: # loop closure case pose_graph.edges.append( PoseGraphEdge(source_id, target_id, transformation_icp, information_icp, uncertain = True)) print("Optimizing PoseGraph ...") option = GlobalOptimizationOption( max_correspondence_distance = 0.03, edge_prune_threshold = 0.25, reference_node = 0) global_optimization(pose_graph, GlobalOptimizationLevenbergMarquardt(), GlobalOptimizationConvergenceCriteria(), option) print("Transform points and display") for point_id in range(n_pcds): print(pose_graph.nodes[point_id].pose) pcds[point_id].transform(pose_graph.nodes[point_id].pose) draw_geometries(pcds)

Before

After

RGBD integration

Open3D implements a scalable RGBD image integration algorithm.

In order to support large scenes, we use a hierarchical hashing structure introduced in Integrater in ElasticReconstruction.

# src/Python/Tutorial/Advanced/rgbd_integration.py import sys sys.path.append("../..") from py3d import * from trajectory_io import * import numpy as np if __name__ == "__main__": camera_poses = read_trajectory("../../TestData/RGBD/odometry.log") # <-抜けている volume = ScalableTSDFVolume(voxel_length = 4.0 / 512.0, sdf_trunc = 0.04, with_color = True) for i in range(len(camera_poses)): print("Integrate {:d}-th image into the volume.".format(i)) color = read_image("../../TestData/RGBD/color/{:05d}.jpg".format(i)) depth = read_image("../../TestData/RGBD/depth/{:05d}.png".format(i)) rgbd = create_rgbd_image_from_color_and_depth(color, depth, depth_trunc = 4.0, convert_rgb_to_intensity = False) volume.integrate(rgbd, PinholeCameraIntrinsic.prime_sense_default, np.linalg.inv(camera_poses[i].pose)) print("Extract a triangle mesh from the volume and visualize it.") mesh = volume.extract_triangle_mesh() mesh.compute_vertex_normals() draw_geometries([mesh])

Camera Trajectory

TSDF (Truncated Signed Distance Function) volume integration

面の位置を0として、各Voxelに面までの距離を符号付で格納

Extract a mesh

Mesh extraction uses the marching cubes algorithm [LorensenAndCline1987].

所感

たくさんregistrationする方法はあるけど、タスク(環境や精度or速度の取捨選択)に応じて適切なものを選ぶにはカンコツがいりそう.

たくさん使って経験値をためたい.

Open3Dのお勉強(二回目)

やりたいこと

Depthセンサで取得したデータをOpen3Dで自由自在に操りたい

教科書

Open3D: A Modern Library for 3D Data Processing — Open3D 0.1 dev documentation

Rgbd Odometry

[Park2017] : Park, Q.-Y. Zhou, and V. Koltun, Colored Point Cloud Registration Revisited, ICCV, 2017. : https://pdfs.semanticscholar.org/e668/cdf876884106948c7151601785023f04ed40.pdf

の実装が

option = OdometryOption()

odo_init = np.identity(4)

print(option)

[success_hybrid_term, trans_hybrid_term, info] = compute_rgbd_odometry(

source_rgbd_image, target_rgbd_image,

pinhole_camera_intrinsic, odo_init,

RGBDOdometryJacobianFromHybridTerm(), option)

で使える。

もうひとつ実装あるが、こちらがオススメみたい。

if success_hybrid_term: print("Using Hybrid RGB-D Odometry") print(trans_hybrid_term) source_pcd_hybrid_term = create_point_cloud_from_rgbd_image( source_rgbd_image, pinhole_camera_intrinsic) source_pcd_hybrid_term.transform(trans_hybrid_term) draw_geometries([target_pcd, source_pcd_hybrid_term])

返り値のtrans_hybrid_termは以下

三次元の同次座標変換行列

Using Hybrid RGB-D Odometry [[ 9.99994666e-01 -1.00290715e-03 -3.10826763e-03 -3.75410348e-03] [ 9.64492959e-04 9.99923448e-01 -1.23356675e-02 2.54977516e-02] [ 3.12040122e-03 1.23326038e-02 9.99919082e-01 1.88139799e-03] [ 0.00000000e+00 0.00000000e+00 0.00000000e+00 1.00000000e+00]]

参考:

compute_rgbd_odometryについて確認

help(compute_rgbd_odometry)

結果

Help on built-in function compute_rgbd_odometry in module py3d:

compute_rgbd_odometry(...)

compute_rgbd_odometry(rgbd_source: py3d.RGBDImage, rgbd_target: py3d.RGBDImage, pinhole_camera_intrinsic: py3d.PinholeCameraIntrinsic=PinholeCameraIntrinsic with width = -1 and height = -1.

Access intrinsics with intrinsic_matrix., odo_init: numpy.ndarray[float64[4, 4]]=array([[1., 0., 0., 0.],

[0., 1., 0., 0.],

[0., 0., 1., 0.],

[0., 0., 0., 1.]]), jacobian: py3d.RGBDOdometryJacobian=RGBDOdometryJacobianFromHybridTerm, option: py3d.OdometryOption=OdometryOption class.

minimum_correspondence_ratio = 0.100000

iteration_number_per_pyramid_level = [ 20, 10, 5, ]

max_depth_diff = 0.030000

min_depth = 0.000000

max_depth = 4.000000)

-> Tuple[bool, numpy.ndarray[float64[4, 4]], numpy.ndarray[float64[6, 6]]]

Function to estimate 6D rigid motion from two RGBD image pairs

KD tree

We pick the 1500-th point as the anchor point and paint it red.

# src/Python/Tutorial/Basic/kdtree.py import sys import numpy as np sys.path.append("../..") from py3d import * if __name__ == "__main__": print("Testing kdtree in py3d ...") print("Load a point cloud and paint it gray.") pcd = read_point_cloud("../../TestData/Feature/cloud_bin_0.pcd") pcd.paint_uniform_color([0.5, 0.5, 0.5]) pcd_tree = KDTreeFlann(pcd) print("Paint the 1500th point red.") pcd.colors[1500] = [1, 0, 0] print("Find its 200 nearest neighbors, paint blue.") [k, idx, _] = pcd_tree.search_knn_vector_3d(pcd.points[1500], 200) np.asarray(pcd.colors)[idx[1:], :] = [0, 0, 1] print("Find its neighbors with distance less than 0.2, paint green.") [k, idx, _] = pcd_tree.search_radius_vector_3d(pcd.points[1500], 0.2) np.asarray(pcd.colors)[idx[1:], :] = [0, 1, 0] print("Visualize the point cloud.") draw_geometries([pcd]) print("")

近傍点を探索する

Iterative Closest Point

ICPとは: 2つの点群を重ね合わせる手法

うまくfittingするには初期値が重要みたい

そして、この初期値はglobal registration algorithmでいい感じが得れるっぽい。

The initial alignment is usually obtained by a global registration algorithm.

See Global registration for examples(http://www.open3d.org/docs/tutorial/Advanced/global_registration.html#global-registration).

参考:

Open3DのTutorialでは"point-to-point ICP"と"point-to-plane ICP"を使っている

The input are two point clouds and an initial transformation that roughly aligns the source point cloud to the target point cloud.

The output is a refined transformation that tightly aligns the two point clouds

# src/Python/Tutorial/Basic/icp_registration.py def draw_registration_result(source, target, transformation): source_temp = copy.deepcopy(source) target_temp = copy.deepcopy(target) source_temp.paint_uniform_color([1, 0.706, 0]) target_temp.paint_uniform_color([0, 0.651, 0.929]) source_temp.transform(transformation) draw_geometries([source_temp, target_temp]) if __name__ == "__main__": source = read_point_cloud("../../TestData/ICP/cloud_bin_0.pcd") target = read_point_cloud("../../TestData/ICP/cloud_bin_1.pcd") threshold = 0.02 trans_init = np.asarray( [[0.862, 0.011, -0.507, 0.5], [-0.139, 0.967, -0.215, 0.7], [0.487, 0.255, 0.835, -1.4], [0.0, 0.0, 0.0, 1.0]]) draw_registration_result(source, target, trans_init) print("Initial alignment") evaluation = evaluate_registration(source, target, threshold, trans_init) print(evaluation) print("Apply point-to-point ICP") reg_p2p = registration_icp(source, target, threshold, trans_init, TransformationEstimationPointToPoint()) print(reg_p2p) print("Transformation is:") print(reg_p2p.transformation) print("") draw_registration_result(source, target, reg_p2p.transformation) print("Apply point-to-plane ICP") reg_p2l = registration_icp(source, target, threshold, trans_init, TransformationEstimationPointToPlane()) print(reg_p2l) print("Transformation is:") print(reg_p2l.transformation) print("") draw_registration_result(source, target, reg_p2l.transformation)

Point to Point ICP

In general, the ICP algorithm iterates over two steps:

・ Find correspondence set K={(p,q)}. from target point cloud P, and source point cloud Q transformed with current transformation matrix T.

・ Update the transformation T by minimizing an objective function E(T) defined over the correspondence set K.

イテレーション回数を増やすと一致率向上するよ

Point to Plane ICP

point to pointより計算速度が速いみたい

[Rusinkiewicz2001] has shown that the point-to-plane ICP algorithm has a faster convergence speed than the point-to-point ICP algorithm.

Working with numpy

numpy -> open3dの型

open3d -> numpyの型

変換が簡単にできる

# src/Python/Tutorial/Basic/working_with_numpy.py import sys, copy import numpy as np sys.path.append("../..") from py3d import * if __name__ == "__main__": # generate some neat n times 3 matrix using a variant of sync function x = np.linspace(-3, 3, 401) mesh_x, mesh_y = np.meshgrid(x,x) z = np.sinc((np.power(mesh_x,2)+np.power(mesh_y,2))) xyz = np.zeros((np.size(mesh_x),3)) xyz[:,0] = np.reshape(mesh_x,-1) xyz[:,1] = np.reshape(mesh_y,-1) xyz[:,2] = np.reshape(z,-1) print('xyz') print(xyz) # Pass xyz to Open3D.PointCloud and visualize pcd = PointCloud() pcd.points = Vector3dVector(xyz) write_point_cloud("../../TestData/sync.ply", pcd) # Load saved point cloud and transform it into NumPy array pcd_load = read_point_cloud("../../TestData/sync.ply") xyz_load = np.asarray(pcd_load.points) print('xyz_load') print(xyz_load) # visualization draw_geometries([pcd_load])

print結果

xyz [[-3.00000000e+00 -3.00000000e+00 -3.89817183e-17] [-2.98500000e+00 -3.00000000e+00 -4.94631078e-03] [-2.97000000e+00 -3.00000000e+00 -9.52804798e-03] ...[f:id:robonchu:20180225115312p:plain] [ 2.97000000e+00 3.00000000e+00 -9.52804798e-03] [ 2.98500000e+00 3.00000000e+00 -4.94631078e-03] [ 3.00000000e+00 3.00000000e+00 -3.89817183e-17]] Writing PLY: [========================================] 100% Reading PLY: [========================================] 100% xyz_load [[-3.00000000e+00 -3.00000000e+00 -3.89817183e-17] [-2.98500000e+00 -3.00000000e+00 -4.94631078e-03] [-2.97000000e+00 -3.00000000e+00 -9.52804798e-03] ... [ 2.97000000e+00 3.00000000e+00 -9.52804798e-03] [ 2.98500000e+00 3.00000000e+00 -4.94631078e-03] [ 3.00000000e+00 3.00000000e+00 -3.89817183e-17]]

ん〜すごくわかりやすい!

Open3Dのお勉強(一回目)

やりたいこと

Depthセンサで取得したデータをOpen3Dで自由自在に操りたい

Open3D – A Modern Library for 3D Data Processing

Open3Dまじでイケてる!

Intelさんありがとうございまぁぁす!!

教科書

Open3D: A Modern Library for 3D Data Processing — Open3D 0.9.0 documentation

インストール

以下、cmakeでpythonのバージョンが揃う指定している

scripts/install-deps-ubuntu.sh mkdir build cd build cmake -DPYTHON_EXECUTABLE:FILEPATH=/usr/bin/python ../src make -j

fatal error: Python.h: No such file or directory

が出たら、

make test works, but unable to run tests individually · Issue #234 · pybind/pybind11 · GitHub

ライブラリpy3dのテスト

- $Open3D/build/lib/Tutorial/Basic$ python rgbd_redwood.py

で

が出たらおけ

Getting Started

Redwood dataset

大事↓

The Redwood format stored depth in a 16-bit single channel image.

The integer value represents the depth measurement in millimeters. It is the default format for Open3D to parse depth images.

# src/Python/Tutorial/Basic/rgbd_redwood.py import sys sys.path.append("../..") #conda install pillow matplotlib from py3d import * import matplotlib.pyplot as plt if __name__ == "__main__": print("Read Redwood dataset") color_raw = read_image("../../TestData/RGBD/color/00000.jpg") depth_raw = read_image("../../TestData/RGBD/depth/00000.png") rgbd_image = create_rgbd_image_from_color_and_depth( color_raw, depth_raw); print(rgbd_image) plt.subplot(1, 2, 1) plt.title('Redwood grayscale image') plt.imshow(rgbd_image.color) plt.subplot(1, 2, 2) plt.title('Redwood depth image') plt.imshow(rgbd_image.depth) plt.show() pcd = create_point_cloud_from_rgbd_image(rgbd_image, PinholeCameraIntrinsic.prime_sense_default) # Flip it, otherwise the pointcloud will be upside down pcd.transform([[1, 0, 0, 0], [0, -1, 0, 0], [0, 0, -1, 0], [0, 0, 0, 1]]) draw_geometries([pcd])

The default conversion function create_rgbd_image_from_color_and_depth creates an RGBDImage from a pair of color and depth image.

The color image is converted into a grayscale image, stored in float ranged in [0, 1].

The depth image is stored in float, representing the depth value in meters. print(rgbd_image) yields:

RGBDImage of size Color image : 640x480, with 1 channels. Depth image : 640x480, with 1 channels. Use numpy.asarray to access buffer data.

Here we use PinholeCameraIntrinsic.prime_sense_default as default camera parameter.

データセット

有名所の

- Redwood dataset

- SUN dataset

- NYU dataset

- TUM dataset

のデータセットの読み込みなど丁寧な説明がある。

本当に素晴らしい。

Basic

python binding

def example_import_function(): from py3d import read_point_cloud pcd = read_point_cloud("../../TestData/ICP/cloud_bin_0.pcd") print(pcd)

-> PointCloud with 198835 points.

以下のようにhelp()でクラスや関数の説明が見れる

def example_help_function(): import py3d as py3d help(py3d) help(py3d.PointCloud) help(py3d.read_point_cloud)

Point Cloud

# src/Python/Tutorial/Basic/pointcloud.py import sys import numpy as np sys.path.append("../..") from py3d import * if __name__ == "__main__": print("Load a ply point cloud, print it, and render it") pcd = read_point_cloud("../../TestData/fragment.ply") print(pcd) print(np.asarray(pcd.points)) draw_geometries([pcd]) print("Downsample the point cloud with a voxel of 0.05") downpcd = voxel_down_sample(pcd, voxel_size = 0.05) draw_geometries([downpcd]) print("Recompute the normal of the downsampled point cloud") estimate_normals(downpcd, search_param = KDTreeSearchParamHybrid( radius = 0.1, max_nn = 30)) draw_geometries([downpcd]) print("") print("Load a polygon volume and use it to crop the original point cloud") vol = read_selection_polygon_volume("../../TestData/Crop/cropped.json") chair = vol.crop_point_cloud(pcd) draw_geometries([chair]) print("") print("Paint chair") chair.paint_uniform_color([1, 0.706, 0]) draw_geometries([chair]) print("")

読込

pcdデータなど一行で読み込める

read_point_cloud reads a point cloud from a file. It tries to decode the file based on the extension name.

The supported extension names are: pcd, ply, xyz, xyzrgb, xyzn, pts.

表示

表示ももちろん一行笑

draw_geometries visualizes the point cloud. Use mouse/trackpad to see the geometry from different view point.

操作方法

-- Mouse view control -- Left button + drag : Rotate. Ctrl + left button + drag : Translate. Wheel : Zoom in/out. -- Keyboard view control -- [/] : Increase/decrease field of view. R : Reset view point. Ctrl/Cmd + C : Copy current view status into the clipboard. Ctrl/Cmd + V : Paste view status from clipboard. -- General control -- Q, Esc : Exit window. H : Print help message. P, PrtScn : Take a screen capture. D : Take a depth capture. O : Take a capture of current rendering settings. :

アニメーションもつくれるみたい

In addition to draw_geometries, Open3D has a set of sibling functions with more advanced functionality. draw_geometries_with_custom_animation allows the programmer to define a custom view trajectory and play an animation in the GUI. draw_geometries_with_animation_callback and draw_geometries_with_key_callback accept Python callback functions as input. The callback function is called in an automatic animation loop, or upon a key press event. See Customized visualization for details.

Customized visualization — Open3D 0.9.0 documentation

ダウンサンプリング

まさかのダウンサンプリングも一行驚

print("Downsample the point cloud with a voxel of 0.05") downpcd = voxel_down_sample(pcd, voxel_size = 0.05) draw_geometries([downpcd])

Vertex normal estimation

print("Recompute the normal of the downsampled point cloud") estimate_normals(downpcd, search_param = KDTreeSearchParamHybrid( radius = 0.1, max_nn = 30)) draw_geometries([downpcd]) print("")

画面上で"n", "+", "-"で法線の操作が可能

estimate_normals computes normal for every point. The function finds adjacent points and calculate the principal axis of the adjacent points using covariance analysis.

The function takes an instance of KDTreeSearchParamHybrid class as an argument. The two key arguments radius = 0.1 and max_nn = 30 specifies search radius and maximum nearest neighbor. It has 10cm of search radius, and only considers up to 30 neighbors to save computation time.

KDtree: kd木 - Wikipedia

Crop point cloud

print("We load a polygon volume and use it to crop the original point cloud") vol = read_selection_polygon_volume("../../TestData/Crop/cropped.json") chair = vol.crop_point_cloud(pcd) draw_geometries([chair]) print("")

Paint Point Cloud

print("Paint chair") chair.paint_uniform_color([1, 0.706, 0]) draw_geometries([chair]) print("")

コツコツ使い方を覚えてPoint Cloudを自由に扱えるようになりたい〜

ROS x ビデオカメラで遊んでみる

やりたいこと

ROSでUSBカメラを使ってみる

教科書

ライセンス

<maintainer email="fei@kanto-gakuin.ac.jp">Fei Qian</maintainer> <license>BSD</license> <author>Fei Qian</author>

環境設定

sudo apto-get install ros-kinetic-usb-cam

sudo apt-get install ros-kinetico-image-pipeline

設定

usb_camについて詳細: usb_cam - ROS Wiki

pixel_format

RGB データと YUV データについて: YUVは、Yは輝度、Uは輝度と青の差、Vは輝度と赤の差

👆超わかりやすい

コーデックについて:

フォーマットの設定と受信、確認

$ rosparam set /usb_cam/pixel_format mjpeg

$ rosrun usb_cam usb_cam_node &

$ rosrun image_view image_view image:=/usb_cam/image_raw

この他に

$ rqtのpluginsのvisualizationから見ることもできる

$ rqt_image_viewでも見れる

<launch> <node name="usb_cam" pkg="usb_cam" type="usb_cam_node" output="screen" > <param name="video_device" value="/dev/video0" /> <param name="camera_frame_id" value="usb_cam" /> </node> <node name="image_view" pkg="image_view" type="image_view" respawn="false" output="screen" required="true"> <remap from="image" to="/usb_cam/image_raw"/> <param name="autosize" value="true" /> </node> </launch>

[ WARN] [1519217872.252076062]: Camera calibration file /home/takuya-usb/.ros/camera_info/head_camera.yaml not found.

カメラキャリブレーション

参考: カメラ キャリブレーションとは - MATLAB & Simulink - MathWorks 日本

チェッカーボードをダウンロード

-

rosrun usb_cam usb_cam_node

rosrun camera_calibration cameracalibrator.py --size 8x6 --square 0.026 image:=/usb_cam/image_raw

キャリブデータの抽出

ビデオストリームの配布と購読

image_transport

image transportを用いると、イメージを圧縮して送ることにより、ビデオストリームを転送する際の帯域への圧迫を緩和することができる。

pythonはimage_transportをサポートしていないため、以下のようにrepublishを用いる。

- $ republish [in_transport] in:=<in_base_topic> [out_transport] out:=<out_base_topic>

cppでは#include <image_transport/image_transport.h>で使用可能

参考: http://mprg-robot.isc.chubu.ac.jp/image_transport/

image_transportでトピック配布

以下の2つのメソッドを提供する

ImageTransportのadvertise

ImageTransportのadvertiseCamera

image_transportのpublisherは単一トピック配布とは異なり、複数の独立したトピックを同時に配布することができる。

compressedで別のトピックで配布するなら、トピック名は<ベーストピック名>/compressedとなる。

このcompressedのような補助機能をサブトピックという。

$rosmsg show sensor_msgs/Image

std_msgs/Header header uint32 seq time stamp string frame_id uint32 height uint32 width string encoding uint8 is_bigendian uint32 step uint8[] data

image_transportが提供しているプラグイン

image_transport ("raw") - The default transport, sending sensor_msgs/Image through ROS.

compressed_image_transport ("compressed") - JPEG or PNG image compression.

theora_image_transport ("theora") - Streaming video using the Theora codec.

参考:

Theoraコーデックを使ってみた① [インストール] - It’s now or never

JPG・PNG・GIFの違いをまとめてみました。 | ギズモード・ジャパン

image_transportの使い方

image_transport/Tutorials/WritingNewTransport - ROS Wiki

購読する時はベーストピック名を使用する。

サブトピック名は以下のようにパラメータでセットする。

- rosparam set base_topic/image_transport [raw | compressed | theora]

キーフレームとは: キーフレームとは?Iフレーム・Pフレーム・Bフレームの違い【GOP】 | AviUtlの易しい使い方

参考:

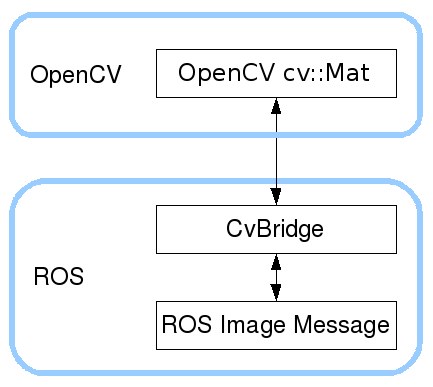

cv_bridge

ROSではOpenCVを簡単に使えるようなインターフェースcv_bridgeを提供している。

- #include <cv_bridge/cv_bridge.h>とすれば使用可能。

イメージ購読プログラム

#include <ros/ros.h> #include <image_transport/image_transport.h> #include <cv_bridge/cv_bridge.h> #include <opencv2/core.hpp> #include <opencv2/highgui.hpp> //#include <opencv2/highgui/highgui.hpp> void imageCallback(const sensor_msgs::ImageConstPtr& msg) { try { cv::imshow("Image_Subscriber", cv_bridge::toCvShare(msg, "bgr8")->image); cv::waitKey(30); } catch (cv_bridge::Exception& e) { ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str()); } } int main(int argc, char **argv) { ros::init(argc, argv, "image_subscriber"); ros::NodeHandle nh; cv::namedWindow("Image_Subscriber"); cv::startWindowThread(); image_transport::ImageTransport it(nh); image_transport::Subscriber sub = it.subscribe("camera_raw", 1, imageCallback); ros::spin(); cv::destroyWindow("camera_view"); return EXIT_SUCCESS; }

CMake

参考: ja/catkin/CMakeLists.txt - ROS Wiki

cmake_minimum_required(VERSION 2.8.3)

project(my_camera)

find_package(catkin REQUIRED COMPONENTS

roscpp

std_msgs

cv_bridge

image_transport

)

catkin_package(

CATKIN_DEPENDS roscpp std_msgs cv_bridge image_transport

)

find_package(OpenCV)

include_directories(

include

${catkin_INCLUDE_DIRS}

${OpenCV_INCLUDE_DIRS}

)

add_executable(image_sub src/image_subscriber.cpp)

target_link_libraries(image_sub

${catkin_LIBRARIES}

${OpenCV_LIBRARIES}

)

参考: ja/catkin/CMakeLists.txt - ROS Wiki

pakage.xml

<buildtool_depend>catkin</buildtool_depend> <build_depend>roscpp</build_depend> <build_depend>std_msgs</build_depend> <build_depend>image_transport</build_depend> <build_depend>cv_bridge</build_depend> <run_depend>roscpp</run_depend> <run_depend>std_msgs</run_depend> <run_depend>image_transport</run_depend> <run_depend>cv_bridge</run_depend>

イメージ配布プログラム

以下でカメラの各パラメータをチェック

- $ v4l2-ctl -d /dev/video0 --list-formats-ext

#include <ros/ros.h> #include <image_transport/image_transport.h> #include <cv_bridge/cv_bridge.h> #include <sensor_msgs/image_encodings.h> #include <opencv2/highgui/highgui.hpp> int main( int argc, char **argv ) { ros::init( argc, argv, "image_publisher" ); ros::NodeHandle nh; // Open camera with CAMERA_INDEX (webcam is typically #0). const int CAMERA_INDEX = 0; cv::VideoCapture capture( CAMERA_INDEX ); if( not capture.isOpened() ) { ROS_INFO_STREAM("Failed to open camera with index " << CAMERA_INDEX << "!"); ros::shutdown(); } // Set the parameters using the results of executing the following command: // v4l2-ctl -d /dev/video0 --list-formats-ext capture.set(CV_CAP_PROP_FRAME_WIDTH, 640); capture.set(CV_CAP_PROP_FRAME_HEIGHT, 480); capture.set(CV_CAP_PROP_FPS, 30); //double width = capture.get(CV_CAP_PROP_FRAME_WIDTH); //double height = capture.get(CV_CAP_PROP_FRAME_HEIGHT); //ROS_DEBUG_STREAM("width: " << width << ", height: " << height); image_transport::ImageTransport it(nh); image_transport::Publisher pub_image = it.advertise( "camera_raw", 1 ); // for debug cv::namedWindow("Image_Publisher", CV_WINDOW_AUTOSIZE | CV_WINDOW_FREERATIO); cv_bridge::CvImagePtr frame = boost::make_shared< cv_bridge::CvImage >(); frame->encoding = sensor_msgs::image_encodings::BGR8; while( ros::ok() ) { // for debug cv::Mat image; capture >> image; cv::imshow("Image_Publisher", image); // capture >> frame->image; if( frame->image.empty() ) { ROS_ERROR_STREAM( "Failed to capture frame!" ); ros::shutdown(); } frame->header.stamp = ros::Time::now(); pub_image.publish( frame->toImageMsg() ); if(cv::waitKey(30) >= 0) break; ros::spinOnce(); } capture.release(); return EXIT_SUCCESS; }

launch

<launch> <param name="pixel_format" value="mjpeg" /> <node name="image_publisher" pkg="my_camera" type="image_pub" output="screen"/> <node name="image_subscriber" pkg="my_camera" type="image_sub" output="screen"/> </launch>

$ rostopic list

/camera_raw /camera_raw/compressed /camera_raw/compressed/parameter_descriptions /camera_raw/compressed/parameter_updates /camera_raw/compressedDepth /camera_raw/compressedDepth/parameter_descriptions /camera_raw/compressedDepth/parameter_updates /camera_raw/theora /camera_raw/theora/parameter_descriptions /camera_raw/theora/parameter_updates /rosout /rosout_agg

カメライメージ配布プログラム

#include <ros/ros.h> #include <image_transport/image_transport.h> #include <cv_bridge/cv_bridge.h> #include <sensor_msgs/image_encodings.h> #include <opencv2/highgui/highgui.hpp> int main( int argc, char **argv ) { ros::init( argc, argv, "image_Camerapublisher" ); ros::NodeHandle n; // Open camera with CAMERA_INDEX (webcam is typically #0). const int CAMERA_INDEX = 0; cv::VideoCapture capture( CAMERA_INDEX ); if( not capture.isOpened() ) { ROS_INFO_STREAM("Failed to open camera with index " << CAMERA_INDEX << "!"); ros::shutdown(); } // Set the parameters using the results of executing the following command: // v4l2-ctl -d /dev/video0 --list-formats-ext capture.set(CV_CAP_PROP_FRAME_WIDTH, 640); capture.set(CV_CAP_PROP_FRAME_HEIGHT, 480); capture.set(CV_CAP_PROP_FPS, 30); image_transport::ImageTransport it( n ); image_transport::CameraPublisher pub_image = it.advertiseCamera( "camera_raw", 1 ); sensor_msgs::CameraInfo cam_info; // for debug //cv::namedWindow("Image_CameraPublisher", CV_WINDOW_AUTOSIZE | CV_WINDOW_FREERATIO); cv_bridge::CvImagePtr frame = boost::make_shared< cv_bridge::CvImage >(); frame->encoding = sensor_msgs::image_encodings::BGR8; while( ros::ok() ) { // for debug //cv::Mat image; //capture >> image; //cv::imshow("Image_CameraPublisher", image); // capture >> frame->image; if( frame->image.empty() ) { ROS_ERROR_STREAM( "Failed to capture frame!" ); ros::shutdown(); } frame->header.stamp = ros::Time::now(); cam_info.header.stamp = frame->header.stamp; pub_image.publish( frame->toImageMsg() , sensor_msgs::CameraInfoConstPtr(new sensor_msgs::CameraInfo(cam_info))); if(cv::waitKey(30) >= 0) break; ros::spinOnce(); } capture.release(); return EXIT_SUCCESS; }

カメライメージ購読プログラム

#include <ros/ros.h> #include <image_transport/image_transport.h> #include <opencv2/highgui/highgui.hpp> #include <cv_bridge/cv_bridge.h> void imageCallback(const sensor_msgs::ImageConstPtr& msg, const sensor_msgs::CameraInfoConstPtr& info) { try { cv::imshow("image_CameraSubscriber", cv_bridge::toCvShare(msg, "bgr8")->image); if(cv::waitKey(30) >= 0) ros::shutdown(); } catch (cv_bridge::Exception& e) { ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str()); } } int main(int argc, char **argv) { ros::init(argc, argv, "image_CameraSubscriber"); ros::NodeHandle nh; cv::namedWindow("image_CameraSubscriber"); cv::startWindowThread(); image_transport::ImageTransport it(nh); image_transport::CameraSubscriber sub; sub = it.subscribeCamera("camera_raw", 1, imageCallback); ros::spin(); cv::destroyWindow("image_CameraSubscriber"); return EXIT_SUCCESS; }

launch

<launch> <node name="camera_publisher" pkg="my_camera" type="camera_pub" output="screen"/> <node name="camera_subscriber" pkg="my_camera" type="camera_sub" required="true" output="screen"/> </launch>

$ rostopic list

/camera_info /camera_raw /camera_raw/compressed /camera_raw/compressed/parameter_descriptions /camera_raw/compressed/parameter_updates /camera_raw/compressedDepth /camera_raw/compressedDepth/parameter_descriptions /camera_raw/compressedDepth/parameter_updates /camera_raw/theora /camera_raw/theora/parameter_descriptions /camera_raw/theora/parameter_updates /rosout /rosout_agg