Running SoftKinetic with ROS (Kinetic)

I got hold of three SoftKinetic cameras (ToF sensors), so let's get them running.

Downloading the SoftKinetic SDK

Register an account

Download the SDK: https://www.softkinetic.com/language/fr-BE/Support/Download/EntryId/517

Add the library path to ~/.bashrc: export LD_LIBRARY_PATH=/opt/softkinetic/DepthSenseSDK/lib/:$LD_LIBRARY_PATH

Installing the ROS softkinetic package and running the demo

Switch the branch to kinetic-dev

Create a workspace and run catkin_make

source devel/setup.bash

roslaunch softkinetic_camera softkinetic_camera_demo.launch

Yay, it works.

Next I'll use this to study PCL and build a new robot.

Error: No space left on device

If this error appears, the following articles explain how to deal with it:

jsk_recognition/install_softkinetic_camera.rst at master · jsk-ros-pkg/jsk_recognition · GitHub

Softkinetic node StreamingException with senz3D - ROS Answers: Open Source Q&A Forum

You may also need to create the following symbolic links:

ln -s /opt/softkinetic/DepthSenseSDK/lib/libDepthSensePlugins.so.1 /opt/softkinetic/DepthSenseSDK/lib/libDepthSensePlugins.so

ln -s /opt/softkinetic/DepthSenseSDK/lib/libDepthSense.so.1 /opt/softkinetic/DepthSenseSDK/lib/libDepthSense.so

Studying machine learning (BoW)

Textbook

Getting the movie review dataset

http://ai.stanford.edu/~amaas/data/sentiment/

import os

import pandas as pd
import pyprind

# change `basepath` to the directory of the unzipped movie dataset
# basepath = '/Users/Sebastian/Desktop/aclImdb/'
basepath = './aclImdb'

labels = {'pos': 1, 'neg': 0}
pbar = pyprind.ProgBar(50000)
df = pd.DataFrame()
for s in ('test', 'train'):
    for l in ('pos', 'neg'):
        path = os.path.join(basepath, s, l)
        for file in os.listdir(path):
            # on Python 3, pass encoding='utf-8' to open()
            with open(os.path.join(path, file), 'r') as infile:
                txt = infile.read()
            # DataFrame.append was removed in pandas 2.0; use pd.concat there
            df = df.append([[txt, labels[l]]], ignore_index=True)
            pbar.update()
df.columns = ['review', 'sentiment']

BoW

Bag of Words:

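Build a vocabulary of unique tokens from the entire set of documents (a token is a string of one or more characters; here, typically a word).

Construct, for each document, a feature vector containing the number of times each word occurs in that document.

Most entries of the feature vectors are 0, so the vectors are sparse.

Converting words into feature vectors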

import numpy as np
from sklearn.feature_extraction.text import CountVectorizer

count = CountVectorizer()
docs = np.array([
    'The sun is shining',
    'The weather is sweet',
    'The sun is shining, the weather is sweet, and one and one is two'])
bag = count.fit_transform(docs)
print(count.vocabulary_)

Result

{u'and': 0, u'sun': 4, u'is': 1, u'two': 7, u'one': 2, u'weather': 8, u'sweet': 5, u'the': 6, u'shining': 3}

print(bag.toarray())

Result

[[0 1 0 1 1 0 1 0 0]
 [0 1 0 0 0 1 1 0 1]
 [2 3 2 1 1 1 2 1 1]]

1-gram: "the", "sun", "is"

2-gram: "the sun", "sun is"
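As a rough illustration of the 1-gram and 2-gram examples above, the sketch below builds word n-grams in plain Python. The helper name `ngrams` is made up for this note and is not part of scikit-learn; in scikit-learn itself the n-gram size is controlled through the `ngram_range` parameter of the vectorizers.

```python
def ngrams(text, n):
    """Return the list of word n-grams of `text` (lowercased, split on whitespace)."""
    tokens = text.lower().split()
    # slide a window of n tokens over the token list
    return [' '.join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


unigrams = ngrams('The sun is shining', 1)
bigrams = ngrams('The sun is shining', 2)
print(unigrams)
print(bigrams)
```
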

Assessing word relevance

TF-IDF: Term Frequency-Inverse Document Frequency

$$\text{tf-idf}(t, d) = \text{tf}(t, d) \times \text{idf}(t, d)$$

$$\text{idf}(t, d) = \log \frac{n_d}{1 + \text{df}(t, d)}$$

tf(t, d): number of times term t occurs in document d

n_d: total number of documents

df(t, d): number of documents d that contain term t

Implementation in scikit-learn

np.set_printoptions(precision=2)

from sklearn.feature_extraction.text import TfidfTransformer

tfidf = TfidfTransformer(use_idf=True, norm='l2', smooth_idf=True)
print(tfidf.fit_transform(count.fit_transform(docs)).toarray())

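Result

[[ 0.   0.43 0.   0.56 0.56 0.   0.43 0.   0.  ]
 [ 0.   0.43 0.   0.   0.   0.56 0.43 0.   0.56]
 [ 0.5  0.45 0.5  0.19 0.19 0.19 0.3  0.25 0.19]]

The relative weight of "is" has dropped: in the third document it has the highest raw count (3), yet its tf-idf value (0.45) is lower than that of "and" and "one" (0.5).

scikit-learn applies L2 normalization as the final step of tf-idf.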
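To see where these numbers come from, here is a small NumPy-only sketch that recomputes the matrix by hand from the count matrix printed earlier. It assumes the smoothed idf that scikit-learn uses when `smooth_idf=True`, namely idf(t) = ln((1 + n_d) / (1 + df(t))) + 1, which differs slightly from the textbook formula; the variable names are only for illustration.

```python
import numpy as np

# term counts per document, copied from the CountVectorizer output
counts = np.array([[0, 1, 0, 1, 1, 0, 1, 0, 0],
                   [0, 1, 0, 0, 0, 1, 1, 0, 1],
                   [2, 3, 2, 1, 1, 1, 2, 1, 1]], dtype=float)

n_d = counts.shape[0]              # total number of documents
df = (counts > 0).sum(axis=0)      # number of documents containing each term

# smoothed idf (assumed to match smooth_idf=True)
idf = np.log((1.0 + n_d) / (1.0 + df)) + 1.0

raw = counts * idf                 # tf * idf before normalization
# L2-normalize every document (row) vector
tfidf = raw / np.linalg.norm(raw, axis=1, keepdims=True)

print(np.round(tfidf, 2))
```

The word "is" occurs in all three documents, so its idf is the smallest possible value (1.0), which is why its weight falls behind rarer words after normalization.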

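Cleaning text data

Remove punctuation from the text, keeping only emoticons

import re

def preprocessor(text):
    # strip HTML markup
    text = re.sub(r'<[^>]*>', '', text)
    # collect emoticons such as :) ;-( =D before punctuation is removed
    emoticons = re.findall(r'(?::|;|=)(?:-)?(?:\)|\(|D|P)', text)
    # lowercase, replace runs of non-word characters with a space,
    # then append the emoticons (without the '-' nose) at the end
    text = re.sub(r'[\W]+', ' ', text.lower()) +\
        ' '.join(emoticons).replace('-', '')
    return text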
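A quick way to check what the cleaning step does is to run it on a short string. The sketch below repeats the function so that it runs on its own; the sample sentence is made up for illustration.

```python
import re


def preprocessor(text):
    # strip HTML markup
    text = re.sub(r'<[^>]*>', '', text)
    # remember the emoticons before punctuation is removed
    emoticons = re.findall(r'(?::|;|=)(?:-)?(?:\)|\(|D|P)', text)
    # lowercase, collapse non-word characters, append emoticons without '-'
    text = re.sub(r'[\W]+', ' ', text.lower()) + \
        ' '.join(emoticons).replace('-', '')
    return text


cleaned = preprocessor('</a>This :) is :( a test :-)!')
print(cleaned)
```

The HTML tag and the exclamation mark are dropped, while the three emoticons are moved to the end of the string with the "nose" character removed.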

A useful reference for regular expressions: Python Regular Expressions | Google for Education | Google Developers

Tokenizing documents

Word stemming: reduces each word to its root form, so that related words map to the same stem.

from nltk.stem.porter import PorterStemmer

porter = PorterStemmer()

def tokenizer(text):
    return text.split()

def tokenizer_porter(text):
    return [porter.stem(word) for word in text.split()]

print(tokenizer('runners like running and thus they run'))
print(tokenizer_porter('runners like running and thus they run'))

Result

['runners', 'like', 'running', 'and', 'thus', 'they', 'run']
[u'runner', 'like', u'run', 'and', u'thu', 'they', 'run']

Stop-word removal: drops extremely common words (e.g. is, and, has)

import nltk
nltk.download('stopwords')

from nltk.corpus import stopwords

stop = stopwords.words('english')
[w for w in tokenizer_porter('a runner likes running and runs a lot')[-10:] if w not in stop]

Result

['runner', u'like', u'run', u'run', 'lot']

Training a logistic regression model to classify documents

Classify reviews as positive or negative

Use a GridSearchCV object to find the optimal set of parameters for the logistic regression model

# .loc slicing is label-based and inclusive, so stop at 24999
# to keep the training and test sets disjoint
X_train = df.loc[:24999, 'review'].values
y_train = df.loc[:24999, 'sentiment'].values
X_test = df.loc[25000:, 'review'].values
y_test = df.loc[25000:, 'sentiment'].values

from sklearn.pipeline import Pipeline
from sklearn.linear_model import LogisticRegression
from sklearn.feature_extraction.text import TfidfVectorizer
try:
    from sklearn.model_selection import GridSearchCV  # scikit-learn >= 0.18
except ImportError:
    from sklearn.grid_search import GridSearchCV      # scikit-learn < 0.18

tfidf = TfidfVectorizer(strip_accents=None,
                        lowercase=False,
                        preprocessor=None)

param_grid = [{'vect__ngram_range': [(1, 1)],
               'vect__stop_words': [stop, None],
               'vect__tokenizer': [tokenizer, tokenizer_porter],
               'clf__penalty': ['l1', 'l2'],
               'clf__C': [1.0, 10.0, 100.0]},
              {'vect__ngram_range': [(1, 1)],
               'vect__stop_words': [stop, None],
               'vect__tokenizer': [tokenizer, tokenizer_porter],
               'vect__use_idf': [False],
               'vect__norm': [None],
               'clf__penalty': ['l1', 'l2'],
               'clf__C': [1.0, 10.0, 100.0]},
              ]

lr_tfidf = Pipeline([('vect', tfidf),
                     ('clf', LogisticRegression(random_state=0))])

gs_lr_tfidf = GridSearchCV(lr_tfidf, param_grid,
                           scoring='accuracy',
                           cv=5,
                           verbose=1,
                           n_jobs=-1)
gs_lr_tfidf.fit(X_train, y_train)

print('Best parameter set: %s ' % gs_lr_tfidf.best_params_)
print('CV Accuracy: %.3f' % gs_lr_tfidf.best_score_)

clf = gs_lr_tfidf.best_estimator_
print('Test Accuracy: %.3f' % clf.score(X_test, y_test))

The best parameter set is as follows:

Best parameter set: {'vect__tokenizer': <function tokenizer at 0x11851c6a8>, 'clf__C': 10.0, 'vect__stop_words': None, 'clf__penalty': 'l2', 'vect__ngram_range': (1, 1)}

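Accuracy

CV Accuracy: 0.897
Test Accuracy: 0.899

These results show that the model can predict whether a review is positive or negative with roughly 90% accuracy.

For text classification, the naive Bayes classifier is another well-known choice.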

Online algorithms and out-of-core learning

Stream documents directly from the local drive and train a logistic regression model on small mini-batches of documents

Split text into tokens, and create a generator that reads and returns one document at a time

import re

import numpy as np
from nltk.corpus import stopwords

stop = stopwords.words('english')

def tokenizer(text):
    text = re.sub(r'<[^>]*>', '', text)
    emoticons = re.findall(r'(?::|;|=)(?:-)?(?:\)|\(|D|P)', text.lower())
    text = re.sub(r'[\W]+', ' ', text.lower()) +\
        ' '.join(emoticons).replace('-', '')
    tokenized = [w for w in text.split() if w not in stop]
    return tokenized

def stream_docs(path):
    with open(path, 'r', encoding='utf-8') as csv:
        next(csv)  # skip header
        for line in csv:
            # each line ends with ',<label>' followed by a newline
            text, label = line[:-3], int(line[-2])
            yield text, label

print(next(stream_docs(path='./movie_data.csv')))

Result

('"In 1974, the teenager Martha Moxley (Maggie Grace) moves to the high-class area of Belle Haven, Greenwich, Connecticut. On the Mischief Night, eve of Halloween, she was murdered in the backyard of her house and her murder remained unsolved. Twenty-two years later, the writer Mark Fuhrman (Christopher Meloni), who is a former LA detective that has fallen in disgrace for perjury in O.J. Simpson trial and moved to Idaho, decides to investigate the case with his partner Stephen Weeks (Andrew Mitchell) with the purpose of writing a book. The locals squirm and do not welcome them, but with the support of the retired detective Steve Carroll (Robert Forster) that was in charge of the investigation in the 70\'s, they discover the criminal and a net of power and money to cover the murder.<br /><br />""Murder in Greenwich"" is a good TV movie, with the true story of a murder of a fifteen years old girl that was committed by a wealthy teenager whose mother was a Kennedy. The powerful and rich family used their influence to cover the murder for more than twenty years. However, a snoopy detective and convicted perjurer in disgrace was able to disclose how the hideous crime was committed. The screenplay shows the investigation of Mark and the last days of Martha in parallel, but there is a lack of the emotion in the dramatization. My vote is seven.<br /><br />Title (Brazil): Not Available"',

1)

Define the get_minibatch function

def get_minibatch(doc_stream, size):
    docs, y = [], []
    try:
        for _ in range(size):
            text, label = next(doc_stream)
            docs.append(text)
            y.append(label)
    except StopIteration:
        # the stream ran out before a full batch was collected
        return None, None
    return docs, y
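The behaviour of get_minibatch at the end of the stream is easy to miss, so here is a small self-contained sketch with a toy generator standing in for stream_docs. The generator `toy_stream` and its five fake documents are assumptions made for this illustration only.

```python
def get_minibatch(doc_stream, size):
    docs, y = [], []
    try:
        for _ in range(size):
            text, label = next(doc_stream)
            docs.append(text)
            y.append(label)
    except StopIteration:
        # stream exhausted before a full batch was collected
        return None, None
    return docs, y


def toy_stream():
    # hypothetical stand-in for stream_docs(): yields (text, label) pairs
    for i in range(5):
        yield 'doc %d' % i, i % 2


stream = toy_stream()
first = get_minibatch(stream, size=2)
second = get_minibatch(stream, size=2)
third = get_minibatch(stream, size=2)   # only one document is left
print(first, second, third)
```

Note that an incomplete final batch is discarded rather than returned, which is why the training loop checks for an empty result and stops.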

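Using the hashing trick for out-of-core learning

The number of features is set to 2**21, and a logistic regression classifier is initialized (SGDClassifier with loss='log').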
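The idea behind the hashing trick can be sketched in a few lines: instead of keeping a vocabulary in memory, each token is hashed straight to a column index below the chosen number of features. The sketch below uses MD5 from the standard library purely as a stand-in; scikit-learn's HashingVectorizer uses MurmurHash3 internally, and the helper names `hashed_index` and `hashed_counts` are made up for this note.

```python
import hashlib

N_FEATURES = 2 ** 21


def hashed_index(token, n_features=N_FEATURES):
    """Map a token to a column index in [0, n_features) without a vocabulary."""
    digest = hashlib.md5(token.encode('utf-8')).hexdigest()
    return int(digest, 16) % n_features


def hashed_counts(tokens, n_features=N_FEATURES):
    """Sparse bag-of-words as {column index: count}."""
    counts = {}
    for tok in tokens:
        idx = hashed_index(tok, n_features)
        counts[idx] = counts.get(idx, 0) + 1
    return counts


vec = hashed_counts(['the', 'sun', 'is', 'shining', 'the'])
print(vec)
```

Because no vocabulary has to be built first, documents can be vectorized one mini-batch at a time; the price is that different tokens can collide on the same index, which a large feature space such as 2**21 makes unlikely.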

from sklearn.feature_extraction.text import HashingVectorizer
from sklearn.linear_model import SGDClassifier

vect = HashingVectorizer(decode_error='ignore',
                         n_features=2**21,
                         preprocessor=None,
                         tokenizer=tokenizer)

# newer scikit-learn releases use max_iter instead of n_iter
# and loss='log_loss' instead of loss='log'
clf = SGDClassifier(loss='log', random_state=1, n_iter=1)
doc_stream = stream_docs(path='./movie_data.csv')

import pyprind
pbar = pyprind.ProgBar(45)

classes = np.array([0, 1])
for _ in range(45):
    X_train, y_train = get_minibatch(doc_stream, size=1000)
    if not X_train:
        break
    X_train = vect.transform(X_train)
    clf.partial_fit(X_train, y_train, classes=classes)
    pbar.update()

X_test, y_test = get_minibatch(doc_stream, size=5000)
X_test = vect.transform(X_test)
print('Accuracy: %.3f' % clf.score(X_test, y_test))

Result

Accuracy: 0.867

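Accuracy is slightly lower than with the grid-searched model (0.867 vs 0.899), but training time is greatly reduced.

The model can also be updated incrementally with the last 5000 documents, as follows: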

clf = clf.partial_fit(X_test, y_test)

Word2Vec

It is an unsupervised learning algorithm based on neural networks that attempts to learn the relationships between words automatically.

Google Code Archive - Long-term storage for Google Code Project Hosting.

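Studying machine learning (ensemble learning)

- Ensemble learning

- Majority voting

- Ensemble error rate

- Building a majority-vote classification algorithm (stacking)

- Evaluating and tuning the ensemble classifier

- Building a classifier ensemble from bootstrap samples (bagging)

- Leveraging weak learners with AdaBoost

Ensemble learning

Combine different classifiers into a single meta-classifier, which yields better generalization performance.

Majority voting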

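$$\hat{y} = \mathrm{mode}\{C_1(x), C_2(x), \ldots, C_m(x)\}$$

(mode: the most frequent value)

$$C(x) = \mathrm{sign}\left[\sum_{j=1}^{m} C_j(x)\right] = \begin{cases} 1 & \text{if } \sum_j C_j(x) > 0 \\ -1 & \text{if } \sum_j C_j(x) < 0 \end{cases}$$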
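The two voting rules above can be sketched in plain Python. The function names `majority_vote` and `sign_vote` are made up for this note, and the sign version assumes labels in {-1, +1}; since the formula leaves a zero sum undefined, the sketch simply rejects that case.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most frequent class label (the mode of the predictions)."""
    return Counter(labels).most_common(1)[0][0]


def sign_vote(labels):
    """Binary majority vote for labels in {-1, +1} via the sign of the sum."""
    total = sum(labels)
    if total == 0:
        # the formula does not define this case (an even split)
        raise ValueError('tie: the sum of the votes is zero')
    return 1 if total > 0 else -1


print(majority_vote([0, 0, 1]))
print(sign_vote([1, -1, 1]))
print(sign_vote([-1, -1, 1]))
```

With an odd number of binary classifiers a tie cannot occur, which is one reason ensembles are often built from an odd number of members.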

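Ensemble error rate

P: probability mass function of the binomial distribution

n: number of base classifiers (n_classifier in the code)

ε: error rate of each base classifier (error in the code)

y: number of base classifiers that misclassify a sample

k: threshold number of wrong classifiers, ⌈n/2⌉ (k_start in the code)

$$P(y \ge k) = \sum_{i=k}^{n} \binom{n}{i} \varepsilon^{i} (1 - \varepsilon)^{n-i} = \varepsilon_{\text{ensemble}}$$

from scipy.special import comb  # scipy.misc.comb was removed in newer SciPy
import math

def ensemble_error(n_classifier, error):
    # math.ceil returns the smallest integer >= its argument
    k_start = int(math.ceil(n_classifier / 2.0))
    probs = [comb(n_classifier, k) * error**k * (1 - error)**(n_classifier - k)
             for k in range(k_start, n_classifier + 1)]
    return sum(probs)

ensemble_error(n_classifier=11, error=0.25)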

Result

0.034327507019042969

The ensemble's error probability (about 0.034) is much lower than the error rate of the individual classifiers (error=0.25).

Sweeping the base error rate from 0.0 to 1.0 gives the following ensemble error rates.

import numpy as np

error_range = np.arange(0.0, 1.01, 0.01)
ens_errors = [ensemble_error(n_classifier=11, error=error)
              for error in error_range]

import matplotlib.pyplot as plt

plt.plot(error_range, ens_errors, label='Ensemble error', linewidth=2)
plt.plot(error_range, error_range, linestyle='--', label='Base error', linewidth=2)
plt.xlabel('Base error')
plt.ylabel('Base/Ensemble error')
plt.legend(loc='upper left')
plt.grid()
plt.tight_layout()
# plt.savefig('./figures/ensemble_err.png', dpi=300)
plt.show()

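As long as the base classifiers' error rate is below 0.5, the ensemble's error rate is lower than that of the individual classifiers.

Implementing a majority-vote classifier

Suppose there are three classifiers with weights 0.2, 0.2, and 0.6

import numpy as np
np.argmax(np.bincount([0, 0, 1], weights=[0.2, 0.2, 0.6]))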

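Even though the first and second classifiers predict class 0, the result is 1, because the third classifier predicts 1 and its weight (0.6) exceeds the combined weight of the other two (0.4).

scikit-learn classifiers can return class-label probabilities, so majority voting can also be done on those probabilities p, weighted per classifier.

$$\hat{y} = \arg\max_{i} \sum_{j=1}^{m} w_j \, p_{ij}$$

ex = np.array([[0.9, 0.1],
               [0.8, 0.2],
               [0.4, 0.6]])
p = np.average(ex, axis=0, weights=[0.2, 0.2, 0.6])
print(p)
print(np.argmax(p))

Result

array([ 0.58, 0.42])
0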

Building a majority-vote classification algorithm (stacking)

An implementation that combines the above

from sklearn.base import BaseEstimator
from sklearn.base import ClassifierMixin
from sklearn.preprocessing import LabelEncoder
from sklearn.base import clone
from sklearn.pipeline import _name_estimators
import numpy as np


class MajorityVoteClassifier(BaseEstimator, ClassifierMixin):
    """ A majority vote ensemble classifier

    Parameters
    ----------
    classifiers : array-like, shape = [n_classifiers]
      Different classifiers for the ensemble

    vote : str, {'classlabel', 'probability'} (default='classlabel')
      If 'classlabel' the prediction is based on the argmax of
      class labels. Else if 'probability', the argmax of the sum of
      probabilities is used to predict the class label
      (recommended for calibrated classifiers).

    weights : array-like, shape = [n_classifiers], optional (default=None)
      If a list of `int` or `float` values are provided, the classifiers
      are weighted by importance; Uses uniform weights if `weights=None`.

    """
    def __init__(self, classifiers, vote='classlabel', weights=None):
        self.classifiers = classifiers
        self.named_classifiers = {key: value for key, value
                                  in _name_estimators(classifiers)}
        self.vote = vote
        self.weights = weights

    def fit(self, X, y):
        """ Fit classifiers.

        Parameters
        ----------
        X : {array-like, sparse matrix}, shape = [n_samples, n_features]
            Matrix of training samples.

        y : array-like, shape = [n_samples]
            Vector of target class labels.

        Returns
        -------
        self : object

        """
        if self.vote not in ('probability', 'classlabel'):
            raise ValueError("vote must be 'probability' or 'classlabel'"
                             "; got (vote=%r)" % self.vote)

        if self.weights and len(self.weights) != len(self.classifiers):
            raise ValueError('Number of classifiers and weights must be equal'
                             '; got %d weights, %d classifiers'
                             % (len(self.weights), len(self.classifiers)))

        # Use LabelEncoder to ensure class labels start with 0, which
        # is important for np.argmax call in self.predict
        self.lablenc_ = LabelEncoder()
        self.lablenc_.fit(y)
        self.classes_ = self.lablenc_.classes_
        self.classifiers_ = []
        for clf in self.classifiers:
            fitted_clf = clone(clf).fit(X, self.lablenc_.transform(y))
            self.classifiers_.append(fitted_clf)
        return self

    def predict(self, X):
        """ Predict class labels for X.

        Parameters
        ----------
        X : {array-like, sparse matrix}, shape = [n_samples, n_features]
            Matrix of training samples.

        Returns
        ----------
        maj_vote : array-like, shape = [n_samples]
            Predicted class labels.

        """
        if self.vote == 'probability':
            maj_vote = np.argmax(self.predict_proba(X), axis=1)
        else:  # 'classlabel' vote
            # Collect results from clf.predict calls
            predictions = np.asarray([clf.predict(X)
                                      for clf in self.classifiers_]).T
            maj_vote = np.apply_along_axis(
                lambda x: np.argmax(np.bincount(x, weights=self.weights)),
                axis=1,
                arr=predictions)
        maj_vote = self.lablenc_.inverse_transform(maj_vote)
        return maj_vote

    def predict_proba(self, X):
        """ Predict class probabilities for X.

        Parameters
        ----------
        X : {array-like, sparse matrix}, shape = [n_samples, n_features]
            Training vectors, where n_samples is the number of samples and
            n_features is the number of features.

        Returns
        ----------
        avg_proba : array-like, shape = [n_samples, n_classes]
            Weighted average probability for each class per sample.

        """
        probas = np.asarray([clf.predict_proba(X)
                             for clf in self.classifiers_])
        avg_proba = np.average(probas, axis=0, weights=self.weights)
        return avg_proba

    def get_params(self, deep=True):
        """ Get classifier parameter names for GridSearch"""
        if not deep:
            return super(MajorityVoteClassifier, self).get_params(deep=False)
        else:
            out = self.named_classifiers.copy()
            # dict.items() replaces six.iteritems (sklearn.externals.six
            # is no longer shipped with scikit-learn)
            for name, step in self.named_classifiers.items():
                for key, value in step.get_params(deep=True).items():
                    out['%s__%s' % (name, key)] = value
            return out

Reference: Python の無名関数( lambda )の使い方 - Life with Python

Preparing the dataset

The Iris dataset:

from sklearn import datasets
from sklearn.preprocessing import StandardScaler
from sklearn.preprocessing import LabelEncoder
try:
    from sklearn.model_selection import train_test_split   # scikit-learn >= 0.18
except ImportError:
    from sklearn.cross_validation import train_test_split  # scikit-learn < 0.18

iris = datasets.load_iris()
X, y = iris.data[50:, [1, 2]], iris.target[50:]
le = LabelEncoder()
y = le.fit_transform(y)

X_train, X_test, y_train, y_test =\
    train_test_split(X, y, test_size=0.5, random_state=1)

Individual results for logistic regression, a decision tree classifier, and a k-nearest-neighbors classifier

import numpy as np
from sklearn.linear_model import LogisticRegression
from sklearn.tree import DecisionTreeClassifier
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
try:
    from sklearn.model_selection import cross_val_score   # scikit-learn >= 0.18
except ImportError:
    from sklearn.cross_validation import cross_val_score  # scikit-learn < 0.18

clf1 = LogisticRegression(penalty='l2', C=0.001, random_state=0)
clf2 = DecisionTreeClassifier(max_depth=1, criterion='entropy', random_state=0)
clf3 = KNeighborsClassifier(n_neighbors=1, p=2, metric='minkowski')

pipe1 = Pipeline([['sc', StandardScaler()], ['clf', clf1]])
pipe3 = Pipeline([['sc', StandardScaler()], ['clf', clf3]])

clf_labels = ['Logistic Regression', 'Decision Tree', 'KNN']

print('10-fold cross validation:\n')
for clf, label in zip([pipe1, clf2, pipe3], clf_labels):
    scores = cross_val_score(estimator=clf, X=X_train, y=y_train,
                             cv=10, scoring='roc_auc')
    print("ROC AUC: %0.2f (+/- %0.2f) [%s]"
          % (scores.mean(), scores.std(), label))

Result

10-fold cross validation:
ROC AUC: 0.92 (+/- 0.20) [Logistic Regression]
ROC AUC: 0.92 (+/- 0.15) [Decision Tree]
ROC AUC: 0.93 (+/- 0.10) [KNN]

Results with ensemble learning

# Majority Rule (hard) Voting
mv_clf = MajorityVoteClassifier(classifiers=[pipe1, clf2, pipe3])

clf_labels += ['Majority Voting']
all_clf = [pipe1, clf2, pipe3, mv_clf]

for clf, label in zip(all_clf, clf_labels):
    scores = cross_val_score(estimator=clf, X=X_train, y=y_train,
                             cv=10, scoring='roc_auc')
    print("ROC AUC: %0.2f (+/- %0.2f) [%s]"
          % (scores.mean(), scores.std(), label))

Result

ROC AUC: 0.92 (+/- 0.20) [Logistic Regression]
ROC AUC: 0.92 (+/- 0.15) [Decision Tree]
ROC AUC: 0.93 (+/- 0.10) [KNN]
ROC AUC: 0.97 (+/- 0.10) [Majority Voting]

The majority-vote ensemble scores higher in 10-fold cross-validation (ROC AUC 0.97) than any of the individual classifiers (0.92 to 0.93).

Evaluating and tuning the ensemble classifier

from sklearn.metrics import roc_curve
from sklearn.metrics import auc

colors = ['black', 'orange', 'blue', 'green']
linestyles = [':', '--', '-.', '-']
for clf, label, clr, ls \
        in zip(all_clf, clf_labels, colors, linestyles):
    # assuming the label of the positive class is 1
    y_pred = clf.fit(X_train, y_train).predict_proba(X_test)[:, 1]
    fpr, tpr, thresholds = roc_curve(y_true=y_test, y_score=y_pred)
    roc_auc = auc(x=fpr, y=tpr)
    plt.plot(fpr, tpr, color=clr, linestyle=ls,
             label='%s (auc = %0.2f)' % (label, roc_auc))

plt.legend(loc='lower right')
plt.plot([0, 1], [0, 1], linestyle='--', color='gray', linewidth=2)
plt.xlim([-0.1, 1.1])
plt.ylim([-0.1, 1.1])
plt.grid()
plt.xlabel('False Positive Rate')
plt.ylabel('True Positive Rate')
# plt.tight_layout()
# plt.savefig('./figures/roc.png', dpi=300)
plt.show()

Result (figure: ROC curves of the four classifiers on the test set)

Checking the decision regions

sc = StandardScaler()
X_train_std = sc.fit_transform(X_train)

from itertools import product

all_clf = [pipe1, clf2, pipe3, mv_clf]

x_min = X_train_std[:, 0].min() - 1
x_max = X_train_std[:, 0].max() + 1
y_min = X_train_std[:, 1].min() - 1
y_max = X_train_std[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),
                     np.arange(y_min, y_max, 0.1))

f, axarr = plt.subplots(nrows=2, ncols=2, sharex='col', sharey='row',
                        figsize=(7, 5))

for idx, clf, tt in zip(product([0, 1], [0, 1]), all_clf, clf_labels):
    clf.fit(X_train_std, y_train)
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    axarr[idx[0], idx[1]].contourf(xx, yy, Z, alpha=0.3)
    axarr[idx[0], idx[1]].scatter(X_train_std[y_train==0, 0],
                                  X_train_std[y_train==0, 1],
                                  c='blue', marker='^', s=50)
    axarr[idx[0], idx[1]].scatter(X_train_std[y_train==1, 0],
                                  X_train_std[y_train==1, 1],
                                  c='red', marker='o', s=50)
    axarr[idx[0], idx[1]].set_title(tt)

plt.text(-3.5, -4.5, s='Sepal width [standardized]',
         ha='center', va='center', fontsize=12)
plt.text(-10.5, 4.5, s='Petal length [standardized]',
         ha='center', va='center', fontsize=12, rotation=90)
plt.tight_layout()
# plt.savefig('./figures/voting_panel', bbox_inches='tight', dpi=300)
plt.show()

Result (figure: decision regions of the four classifiers)

The ensemble's decision region looks like a blend of the decision regions of the individual classifiers.

Tuning the parameters

mv_clf.get_params()

Result

{'decisiontreeclassifier': DecisionTreeClassifier(class_weight=None, criterion='entropy', max_depth=1,

max_features=None, max_leaf_nodes=None,

min_impurity_decrease=0.0, min_impurity_split=None,

min_samples_leaf=1, min_samples_split=2,

min_weight_fraction_leaf=0.0, presort=False, random_state=0,

splitter='best'),

'decisiontreeclassifier__class_weight': None,

'decisiontreeclassifier__criterion': 'entropy',

'decisiontreeclassifier__max_depth': 1,

'decisiontreeclassifier__max_features': None,

'decisiontreeclassifier__max_leaf_nodes': None,

'decisiontreeclassifier__min_impurity_decrease': 0.0,

'decisiontreeclassifier__min_impurity_split': None,

'decisiontreeclassifier__min_samples_leaf': 1,

'decisiontreeclassifier__min_samples_split': 2,

'decisiontreeclassifier__min_weight_fraction_leaf': 0.0,

'decisiontreeclassifier__presort': False,

'decisiontreeclassifier__random_state': 0,

'decisiontreeclassifier__splitter': 'best',

'pipeline-1': Pipeline(memory=None,

steps=[('sc', StandardScaler(copy=True, with_mean=True, with_std=True)), ['clf', LogisticRegression(C=0.001, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,

penalty='l2', random_state=0, solver='liblinear', tol=0.0001,

verbose=0, warm_start=False)]]),

'pipeline-1__clf': LogisticRegression(C=0.001, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,

penalty='l2', random_state=0, solver='liblinear', tol=0.0001,

verbose=0, warm_start=False),

'pipeline-1__clf__C': 0.001,

'pipeline-1__clf__class_weight': None,

'pipeline-1__clf__dual': False,

'pipeline-1__clf__fit_intercept': True,

'pipeline-1__clf__intercept_scaling': 1,

'pipeline-1__clf__max_iter': 100,

'pipeline-1__clf__multi_class': 'ovr',

'pipeline-1__clf__n_jobs': 1,

'pipeline-1__clf__penalty': 'l2',

'pipeline-1__clf__random_state': 0,

'pipeline-1__clf__solver': 'liblinear',

'pipeline-1__clf__tol': 0.0001,

'pipeline-1__clf__verbose': 0,

'pipeline-1__clf__warm_start': False,

'pipeline-1__memory': None,

'pipeline-1__sc': StandardScaler(copy=True, with_mean=True, with_std=True),

'pipeline-1__sc__copy': True,

'pipeline-1__sc__with_mean': True,

'pipeline-1__sc__with_std': True,

'pipeline-1__steps': [('sc',

StandardScaler(copy=True, with_mean=True, with_std=True)),

['clf',

LogisticRegression(C=0.001, class_weight=None, dual=False, fit_intercept=True,

intercept_scaling=1, max_iter=100, multi_class='ovr', n_jobs=1,

penalty='l2', random_state=0, solver='liblinear', tol=0.0001,

verbose=0, warm_start=False)]],

'pipeline-2': Pipeline(memory=None,

steps=[('sc', StandardScaler(copy=True, with_mean=True, with_std=True)), ['clf', KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=1, p=2,

weights='uniform')]]),

'pipeline-2__clf': KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=1, p=2,

weights='uniform'),

'pipeline-2__clf__algorithm': 'auto',

'pipeline-2__clf__leaf_size': 30,

'pipeline-2__clf__metric': 'minkowski',

'pipeline-2__clf__metric_params': None,

'pipeline-2__clf__n_jobs': 1,

'pipeline-2__clf__n_neighbors': 1,

'pipeline-2__clf__p': 2,

'pipeline-2__clf__weights': 'uniform',

'pipeline-2__memory': None,

'pipeline-2__sc': StandardScaler(copy=True, with_mean=True, with_std=True),

'pipeline-2__sc__copy': True,

'pipeline-2__sc__with_mean': True,

'pipeline-2__sc__with_std': True,

'pipeline-2__steps': [('sc',

StandardScaler(copy=True, with_mean=True, with_std=True)),

['clf',

KNeighborsClassifier(algorithm='auto', leaf_size=30, metric='minkowski',

metric_params=None, n_jobs=1, n_neighbors=1, p=2,

weights='uniform')]]}

The get_params method returns a dictionary whose keys are the parameter names and whose values are the parameter values.

Let's vary the parameters and look at the results

try:
    from sklearn.model_selection import GridSearchCV  # scikit-learn >= 0.18
except ImportError:
    from sklearn.grid_search import GridSearchCV      # scikit-learn < 0.18

params = {'decisiontreeclassifier__max_depth': [1, 2],
          'pipeline-1__clf__C': [0.001, 0.1, 100.0]}

grid = GridSearchCV(estimator=mv_clf, param_grid=params,
                    cv=10, scoring='roc_auc')
grid.fit(X_train, y_train)

if hasattr(grid, 'cv_results_'):
    cv_keys = ('mean_test_score', 'std_test_score', 'params')
    for r, _ in enumerate(grid.cv_results_['mean_test_score']):
        print("%0.3f +/- %0.2f %r"
              % (grid.cv_results_[cv_keys[0]][r],
                 grid.cv_results_[cv_keys[1]][r] / 2.0,
                 grid.cv_results_[cv_keys[2]][r]))
else:
    # older scikit-learn releases only provide grid_scores_
    for params, mean_score, scores in grid.grid_scores_:
        print("%0.3f +/- %0.2f %r"
              % (mean_score, scores.std() / 2.0, params))

print('Best parameters: %s' % grid.best_params_)
# scoring='roc_auc', so best_score_ is a ROC AUC value despite the label
print('Accuracy: %.2f' % grid.best_score_)

Result

0.967 +/- 0.05 {'pipeline-1__clf__C': 0.001, 'decisiontreeclassifier__max_depth': 1}

0.967 +/- 0.05 {'pipeline-1__clf__C': 0.1, 'decisiontreeclassifier__max_depth': 1}

1.000 +/- 0.00 {'pipeline-1__clf__C': 100.0, 'decisiontreeclassifier__max_depth': 1}

0.967 +/- 0.05 {'pipeline-1__clf__C': 0.001, 'decisiontreeclassifier__max_depth': 2}

0.967 +/- 0.05 {'pipeline-1__clf__C': 0.1, 'decisiontreeclassifier__max_depth': 2}

1.000 +/- 0.00 {'pipeline-1__clf__C': 100.0, 'decisiontreeclassifier__max_depth': 2}

Best parameters: {'pipeline-1__clf__C': 100.0, 'decisiontreeclassifier__max_depth': 1}

Accuracy: 1.00

Building a classifier ensemble from bootstrap samples (bagging)

A concrete example

Check the effect of bagging

Preparing the dataset

import pandas as pd

df_wine = pd.read_csv('https://archive.ics.uci.edu/ml/'
                      'machine-learning-databases/wine/wine.data',
                      header=None)

df_wine.columns = ['Class label', 'Alcohol', 'Malic acid', 'Ash',
                   'Alcalinity of ash', 'Magnesium', 'Total phenols',
                   'Flavanoids', 'Nonflavanoid phenols', 'Proanthocyanins',
                   'Color intensity', 'Hue', 'OD280/OD315 of diluted wines',
                   'Proline']

# drop 1 class
df_wine = df_wine[df_wine['Class label'] != 1]

y = df_wine['Class label'].values
X = df_wine[['Alcohol', 'Hue']].values

from sklearn.preprocessing import LabelEncoder
try:
    from sklearn.model_selection import train_test_split   # scikit-learn >= 0.18
except ImportError:
    from sklearn.cross_validation import train_test_split  # scikit-learn < 0.18

le = LabelEncoder()
y = le.fit_transform(y)

X_train, X_test, y_train, y_test =\
    train_test_split(X, y, test_size=0.40, random_state=1)

Implementing bagging

from sklearn.ensemble import BaggingClassifier
from sklearn.tree import DecisionTreeClassifier

tree = DecisionTreeClassifier(criterion='entropy',
                              max_depth=None,
                              random_state=1)

# base_estimator was renamed to estimator in scikit-learn 1.2
bag = BaggingClassifier(base_estimator=tree,
                        n_estimators=500,
                        max_samples=1.0,
                        max_features=1.0,
                        bootstrap=True,
                        bootstrap_features=False,
                        n_jobs=1,
                        random_state=1)
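With bootstrap=True, each of the 500 trees is trained on a sample drawn with replacement from the training set. The NumPy sketch below shows what one such bootstrap sample looks like on ten toy indices; it only illustrates the sampling idea and is not the code BaggingClassifier runs internally.

```python
import numpy as np

rng = np.random.RandomState(1)

n_samples = 10
indices = np.arange(n_samples)

# draw n_samples indices WITH replacement: some repeat, some never appear
bootstrap_idx = rng.choice(indices, size=n_samples, replace=True)

# samples that were never drawn ("out-of-bag" for this round)
oob_idx = np.setdiff1d(indices, bootstrap_idx)

print('bootstrap sample:', bootstrap_idx)
print('out-of-bag      :', oob_idx)
```

Because every tree sees a slightly different resampled dataset, the individual trees overfit in different ways, and combining their votes tends to reduce variance.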

Comparing the decision tree and bagging

from sklearn.metrics import accuracy_score

tree = tree.fit(X_train, y_train)
y_train_pred = tree.predict(X_train)
y_test_pred = tree.predict(X_test)

tree_train = accuracy_score(y_train, y_train_pred)
tree_test = accuracy_score(y_test, y_test_pred)
print('Decision tree train/test accuracies %.3f/%.3f'
      % (tree_train, tree_test))

bag = bag.fit(X_train, y_train)
y_train_pred = bag.predict(X_train)
y_test_pred = bag.predict(X_test)

bag_train = accuracy_score(y_train, y_train_pred)
bag_test = accuracy_score(y_test, y_test_pred)
print('Bagging train/test accuracies %.3f/%.3f'
      % (bag_train, bag_test))

Result

Decision tree train/test accuracies 1.000/0.833
Bagging train/test accuracies 1.000/0.896

Visualizing the decision boundaries

import numpy as np
import matplotlib.pyplot as plt

x_min = X_train[:, 0].min() - 1
x_max = X_train[:, 0].max() + 1
y_min = X_train[:, 1].min() - 1
y_max = X_train[:, 1].max() + 1

xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),
                     np.arange(y_min, y_max, 0.1))

f, axarr = plt.subplots(nrows=1, ncols=2, sharex='col', sharey='row',
                        figsize=(8, 3))

for idx, clf, tt in zip([0, 1], [tree, bag], ['Decision Tree', 'Bagging']):
    clf.fit(X_train, y_train)
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    axarr[idx].contourf(xx, yy, Z, alpha=0.3)
    axarr[idx].scatter(X_train[y_train == 0, 0], X_train[y_train == 0, 1],
                       c='blue', marker='^')
    axarr[idx].scatter(X_train[y_train == 1, 0], X_train[y_train == 1, 1],
                       c='red', marker='o')
    axarr[idx].set_title(tt)

axarr[0].set_ylabel('Alcohol', fontsize=12)
plt.text(10.2, -1.2, s='Hue', ha='center', va='center', fontsize=12)
plt.tight_layout()
# plt.savefig('./figures/bagging_region.png',
#             dpi=300,
#             bbox_inches='tight')
plt.show()

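Leveraging weak learners with AdaBoost

The original boosting procedure

1. Draw a random subset d1 of training samples from the training dataset D without replacement, and train a weak learner C1 on it.

2. Draw a second random training subset d2 from the training dataset without replacement, add 50% of the samples that were previously misclassified, and train a weak learner C2.

3. Find the training samples d3 in the training dataset D on which C1 and C2 disagree, and train a third weak learner C3 on them.

4. Combine the weak learners C1, C2, and C3 by majority voting.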

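AdaBoost

Ensembles (4): AdaBoost - YouTube

1. Initialize the weight vector w of the training samples.

2. For each round j of the m boosting rounds, repeat steps 3 to 8.

3. Train a weighted weak learner.

4. Predict the class labels.

5. Compute the weighted error rate.

6. Compute the coefficient used to update the weights.

7. Update the weights.

8. Normalize the weights so that they sum to 1.

9. Compute the final prediction for the input feature matrix.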
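Steps 5 to 8 can be sketched with NumPy for a single boosting round. The formulas used here are the common textbook formulation and are an assumption of this note, since they are not spelled out above: weighted error ε = w · 1(ŷ ≠ y), coefficient α = 0.5 ln((1 − ε) / ε), and update w ← w · exp(−α ŷ y) followed by normalization. The ten labels and predictions are toy values.

```python
import numpy as np

# toy data: true labels and one weak learner's predictions, both in {-1, +1}
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
y_pred = np.array([1, 1, 1, -1, -1, -1, -1, -1, -1, -1])

# step 1: uniform initial weights that sum to 1
w = np.full(len(y), 1.0 / len(y))

# step 5: weighted error rate = total weight of the misclassified samples
miss = (y_pred != y)
epsilon = np.dot(w, miss.astype(float))

# step 6: coefficient used for the weight update
alpha = 0.5 * np.log((1.0 - epsilon) / epsilon)

# step 7: shrink weights of correct samples, grow weights of wrong ones
w = w * np.exp(-alpha * y_pred * y)

# step 8: normalize so that the weights sum to 1 again
w = w / w.sum()

print('epsilon = %.3f, alpha = %.3f' % (epsilon, alpha))
print(np.round(w, 4))
```

After the update, the three misclassified samples carry half of the total weight, so the next weak learner is pushed to focus on them.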

Comparing the decision tree and AdaBoost

from sklearn.ensemble import AdaBoostClassifier

tree = DecisionTreeClassifier(criterion='entropy',
                              max_depth=1,
                              random_state=0)

# base_estimator was renamed to estimator in scikit-learn 1.2
ada = AdaBoostClassifier(base_estimator=tree,
                         n_estimators=500,
                         learning_rate=0.1,
                         random_state=0)

tree = tree.fit(X_train, y_train)
y_train_pred = tree.predict(X_train)
y_test_pred = tree.predict(X_test)

tree_train = accuracy_score(y_train, y_train_pred)
tree_test = accuracy_score(y_test, y_test_pred)
print('Decision tree train/test accuracies %.3f/%.3f'
      % (tree_train, tree_test))

ada = ada.fit(X_train, y_train)
y_train_pred = ada.predict(X_train)
y_test_pred = ada.predict(X_test)

ada_train = accuracy_score(y_train, y_train_pred)
ada_test = accuracy_score(y_test, y_test_pred)
print('AdaBoost train/test accuracies %.3f/%.3f'
      % (ada_train, ada_test))

Result

Decision tree train/test accuracies 0.845/0.854
AdaBoost train/test accuracies 1.000/0.875

Test accuracy improves slightly over the decision stump (0.875 vs 0.854), but variance increases: the gap between training and test accuracy is now much larger (1.000 vs 0.875).

Checking the decision regions

x_min, x_max = X_train[:, 0].min() - 1, X_train[:, 0].max() + 1
y_min, y_max = X_train[:, 1].min() - 1, X_train[:, 1].max() + 1
xx, yy = np.meshgrid(np.arange(x_min, x_max, 0.1),
                     np.arange(y_min, y_max, 0.1))

f, axarr = plt.subplots(1, 2, sharex='col', sharey='row', figsize=(8, 3))

for idx, clf, tt in zip([0, 1], [tree, ada], ['Decision Tree', 'AdaBoost']):
    clf.fit(X_train, y_train)
    Z = clf.predict(np.c_[xx.ravel(), yy.ravel()])
    Z = Z.reshape(xx.shape)
    axarr[idx].contourf(xx, yy, Z, alpha=0.3)
    axarr[idx].scatter(X_train[y_train == 0, 0], X_train[y_train == 0, 1],
                       c='blue', marker='^')
    axarr[idx].scatter(X_train[y_train == 1, 0], X_train[y_train == 1, 1],
                       c='red', marker='o')
    axarr[idx].set_title(tt)

axarr[0].set_ylabel('Alcohol', fontsize=12)
plt.text(10.2, -1.2, s='Hue', ha='center', va='center', fontsize=12)
plt.tight_layout()
# plt.savefig('./figures/adaboost_region.png',
#             dpi=300,
#             bbox_inches='tight')
plt.show()

Studying ROS x Leap Motion

Goal

Try out the Leap Motion with ROS

Textbook

License

<maintainer email="fei@kanto-gakuin.ac.jp">Fei Qian</maintainer>
<license>BSD</license>
<author>Fei Qian</author>

Setup

Download the SDK: V2 Tracking - Leap Motion Developer

Install the 64-bit version

sudo dpkg --install Leap-2.3.1+31549-x64.deb

If it does not work on Ubuntu 16.04, apply the fix described in "Ubuntu 16.04にLeapMotion導入 - Qiita"

- To start the Leap daemon

sudo service leapd start

- To stop it

sudo service leapd stop

- To check its status

sudo service leapd status

- Settings

LeapControlPanel --showsettings

- Visualizer

Visualizer

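Setting the paths

export LEAP_SDK=~/ros_ws/book/LeapDeveloperKit_2.3.1+31549_linux/LeapSDK
export PYTHONPATH=$PYTHONPATH:$LEAP_SDK/lib:$LEAP_SDK/lib/x64

Launching from ROS

For C++, you have to write the node yourself

It can be run by following the implementation below

C++ implementation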

├── CMakeLists.txt
└── leap_teleop
    ├── CMakeLists.txt
    ├── launch
    │   └── leap_turtlesim.launch
    ├── msg
    │   └── leap_motion.msg
    ├── package.xml
    └── src
        ├── leap_motion_publisher.cpp
        ├── leap_motion_subscriber.cpp
        ├── leap_turtle_teleop.cpp

msg

Header header
uint32 hand_id
geometry_msgs/Vector3 direction
geometry_msgs/Vector3 normal
geometry_msgs/Vector3 velocity
geometry_msgs/Vector3 palmpos
geometry_msgs/Vector3 ypr

Implementation (publisher)

Inherit from the Listener class and override the methods you need

#include <Leap.h>
#include <ros/ros.h>
#include <leap_teleop/leap_motion.h>

using namespace Leap;

class HandsListener : public Listener {
public:
  ros::NodeHandle nh;
  ros::Publisher pub;

  /*
   * Called once, when this Listener object is newly added to a Controller.
   */
  virtual void onInit(const Controller&);

  /*
   * Called when the Controller object connects to the Leap Motion software
   * and the Leap Motion hardware device is plugged in, or when this Listener
   * object is added to a Controller that is already connected.
   */
  virtual void onConnect(const Controller&);

  /*
   * Called when the Controller object disconnects from the Leap Motion software
   * or the Leap Motion hardware is unplugged.
   */
  virtual void onDisconnect(const Controller&) { ROS_DEBUG("Disconnected"); }

  /*
   * Called when this Listener object is removed from the Controller or the
   * Controller instance is destroyed.
   */
  virtual void onExit(const Controller&) { ROS_DEBUG("Exited"); }

  /*
   * Called when a new frame of hand and finger tracking data is available.
   */
  virtual void onFrame(const Controller&);

  /*
   * Called when this application becomes the foreground application.
   */
  virtual void onFocusGained(const Controller&) { ROS_DEBUG("Focus Gained"); }

  /*
   * Called when this application loses the foreground focus.
   */
  virtual void onFocusLost(const Controller&) { ROS_DEBUG("Focus Lost"); }

  /*
   * Called when a Leap Motion controller plugged in, unplugged, or the device changes state.
   */
  virtual void onDeviceChange(const Controller&) { ROS_DEBUG("Device Changed"); }

  /*
   * Called when the Leap Motion daemon/service connects to your application Controller.
   */
  virtual void onServiceConnect(const Controller&) { ROS_DEBUG("Service Connected"); }

  /*
   * Called if the Leap Motion daemon/service disconnects from your application Controller.
   */
  virtual void onServiceDisconnect(const Controller&) { ROS_DEBUG("Service Disconnected"); }

private:
};

void HandsListener::onInit(const Controller& controller) {
  std::cout << "Initialized" << std::endl;
  pub = nh.advertise<leap_teleop::leap_motion>("hands_motion", 1);
}

void HandsListener::onConnect(const Controller& controller) {
  std::cout << "Connected" << std::endl;
  controller.enableGesture(Gesture::TYPE_CIRCLE);
  controller.enableGesture(Gesture::TYPE_KEY_TAP);
  controller.enableGesture(Gesture::TYPE_SCREEN_TAP);
  controller.enableGesture(Gesture::TYPE_SWIPE);
}

void HandsListener::onFrame(const Controller& controller) {
  // Get the most recent frame and report some basic information
  const Frame frame = controller.frame();
  leap_teleop::leap_motion msg;
  msg.header.frame_id = "leap_motion_pub";
  msg.header.stamp = ros::Time::now();

  HandList hands = frame.hands();
  const Hand hand = hands[0];
  Vector normal = hand.palmNormal();
  Vector direction = hand.direction();
  Vector velocity = hand.palmVelocity();
  Vector position = hand.palmPosition();

  msg.hand_id = hand.id();
  msg.direction.x = direction[0];
  msg.direction.y = direction[1];
  msg.direction.z = direction[2];
  msg.normal.x = normal[0];
  msg.normal.y = normal[1];
  msg.normal.z = normal[2];
  msg.velocity.x = velocity[0];
  msg.velocity.y = velocity[1];
  msg.velocity.z = velocity[2];
  msg.palmpos.x = position[0];
  msg.palmpos.y = position[1];
  msg.palmpos.z = position[2];
  msg.ypr.x = direction.pitch() * RAD_TO_DEG;
  msg.ypr.y = normal.roll() * RAD_TO_DEG;
  msg.ypr.z = direction.yaw() * RAD_TO_DEG;

  std::cout << " Hand ID:" << hand.id() << std::endl;
  std::cout << " PalmPosition:" << hand.palmPosition() << std::endl;
  std::cout << " PalmVelocity:" << hand.palmVelocity() << std::endl;
  std::cout << " PalmNormal:" << hand.palmNormal() << std::endl;
  std::cout << "PalmDirection:" << hand.direction() << std::endl;
  std::cout << " pitch:" << msg.ypr.x << std::endl;
  std::cout << " roll:" << msg.ypr.y << std::endl;
  std::cout << " yaw:" << msg.ypr.z << std::endl;
  std::cout << "--------------" << std::endl;

  pub.publish(msg);
}

int main(int argc, char** argv) {
  ros::init(argc, argv, "leap_motion_publisher");

  // Create a sample listener and controller
  HandsListener listener;
  Controller controller;

  // Have the sample listener receive events from the controller
  controller.addListener(listener);
  controller.setPolicyFlags(static_cast<Leap::Controller::PolicyFlag> (Leap::Controller::POLICY_IMAGES));

  ros::spin();

  // Remove the sample listener when done
  controller.removeListener(listener);
  return 0;
}

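The onInit method is called when the listener is added with addListener.

onFrame is the callback invoked whenever the frame data is updated; it packs the hand data into a message and publishes it.

For PolicyFlag and related settings, see Controller — Leap Motion C++ SDK v3.2 documentation.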
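As a rough illustration of what onFrame writes into the ypr field, here is a minimal Python sketch. It assumes the angle definitions of the Leap SDK's Vector class (pitch = atan2(y, -z), yaw = atan2(x, -z), roll = atan2(x, -y)); the helper names and the plain tuples are hypothetical stand-ins for Leap::Vector. Note that, despite the field name, the publisher stores pitch in x, roll in y and yaw in z.

```python
import math

RAD_TO_DEG = 180.0 / math.pi

def pitch(v):
    """Angle around the x axis, assuming Leap's convention atan2(y, -z)."""
    x, y, z = v
    return math.atan2(y, -z)

def yaw(v):
    """Angle around the y axis, assuming Leap's convention atan2(x, -z)."""
    x, y, z = v
    return math.atan2(x, -z)

def roll(v):
    """Angle around the z axis, assuming Leap's convention atan2(x, -y)."""
    x, y, z = v
    return math.atan2(x, -y)

def make_ypr(direction, normal):
    """Mirror the publisher: x = pitch(direction), y = roll(normal), z = yaw(direction), in degrees."""
    return (
        pitch(direction) * RAD_TO_DEG,
        roll(normal) * RAD_TO_DEG,
        yaw(direction) * RAD_TO_DEG,
    )

# A flat hand pointing away from the user: direction along -z, palm normal along -y.
print(make_ypr((0.0, 0.0, -1.0), (0.0, -1.0, 0.0)))
```

With the hand flat and pointing straight ahead, all three angles come out as zero, which is the neutral pose for the teleop node.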

Implementation: subscriber

//#include <Leap.h>
#include <ros/ros.h>
#include <leap_teleop/leap_motion.h>

void callback(const leap_teleop::leap_motion::ConstPtr& msg) {
  std::cout << "frame id: " << msg->header.frame_id << std::endl;
  std::cout << "timestamp: " << msg->header.stamp << std::endl;
  std::cout << "seq: " << msg->header.seq << std::endl;
  std::cout << "hand id: " << msg->hand_id << std::endl;
  std::cout << "direction: \n" << msg->direction << std::endl;
  std::cout << "normal: \n" << msg->normal << std::endl;
  std::cout << "velocity: \n" << msg->velocity << std::endl;
  std::cout << "palmpos: \n" << msg->palmpos << std::endl;
  std::cout << "ypr: \n" << msg->ypr << std::endl;
  std::cout << "--------------" << std::endl;
}

int main(int argc, char** argv) {
  ros::init(argc, argv, "leap_motion_subscriber");
  ros::NodeHandle nh;
  ros::Subscriber sub = nh.subscribe<leap_teleop::leap_motion>(
      "/hands_motion", 10, callback);
  ros::spin();
  return 0;
}

CMakeLists.txt

cmake_minimum_required(VERSION 2.8.3)

project(leap_teleop)

find_package(catkin REQUIRED COMPONENTS

roscpp

rospy

std_msgs

message_generation

tf

cv_bridge

image_transport

)

add_message_files(

FILES

leap_motion.msg

)

generate_messages(DEPENDENCIES std_msgs geometry_msgs sensor_msgs)

catkin_package(

CATKIN_DEPENDS roscpp rospy std_msgs message_runtime

)

include_directories(include

${catkin_INCLUDE_DIRS}

$ENV{LEAP_SDK}/include

)

if(DEFINED ENV{LEAP_SDK})

add_executable(leap_pub src/leap_motion_publisher.cpp)

target_link_libraries(leap_pub ${catkin_LIBRARIES}

${Boost_LIBRARIES}

${catkin_LIBRARIES} $ENV{LEAP_SDK}/lib/x64/libLeap.so

)

add_dependencies(leap_pub leap_teleop_gencpp) # make sure the message headers are generated before this target is built

add_executable(leap_sub src/leap_motion_subscriber.cpp)

target_link_libraries(leap_sub ${catkin_LIBRARIES}

${Boost_LIBRARIES}

${catkin_LIBRARIES} $ENV{LEAP_SDK}/lib/x64/libLeap.so

)

add_dependencies(leap_sub leap_teleop_gencpp)

add_executable(leap_turtle_teleop src/leap_turtle_teleop.cpp)

target_link_libraries(leap_turtle_teleop ${catkin_LIBRARIES}

${Boost_LIBRARIES}

${catkin_LIBRARIES} $ENV{LEAP_SDK}/lib/x64/libLeap.so

)

add_dependencies(leap_turtle_teleop leap_teleop_gencpp)

install(TARGETS leap_pub leap_sub leap_turtle_teleop

RUNTIME DESTINATION ${CATKIN_PACKAGE_BIN_DESTINATION}

)

endif()

package.xml

<buildtool_depend>catkin</buildtool_depend>
<build_depend>camera_calibration_parsers</build_depend>
<build_depend>camera_info_manager</build_depend>
<build_depend>geometry_msgs</build_depend>
<build_depend>image_transport</build_depend>
<build_depend>message_generation</build_depend>
<build_depend>roscpp</build_depend>
<build_depend>roslib</build_depend>
<build_depend>rospack</build_depend>
<build_depend>rospy</build_depend>
<build_depend>std_msgs</build_depend>
<build_depend>visualization_msgs</build_depend>
<run_depend>camera_calibration_parsers</run_depend>
<run_depend>camera_info_manager</run_depend>
<run_depend>geometry_msgs</run_depend>
<run_depend>image_transport</run_depend>
<run_depend>message_runtime</run_depend>
<run_depend>roscpp</run_depend>
<run_depend>roslib</run_depend>
<run_depend>rospack</run_depend>
<run_depend>rospy</run_depend>
<run_depend>std_msgs</run_depend>
<run_depend>visualization_msgs</run_depend>

Implementation: turtle control

<launch>
  <!-- Turtlesim Node -->
  <node pkg="turtlesim" type="turtlesim_node" name="sim"/>

  <!-- leapmotion node -->
  <node pkg="leap_teleop" type="leap_pub" name="leap_motion" />
  <node pkg="leap_teleop" type="leap_turtle_teleop" name="leap_teleop"
        launch-prefix="xterm -font r14 -bg darkblue -geometry 113x30+503+80 -e"
        required="true" />
</launch>

//#include <Leap.h>
#include <ros/ros.h>
#include <geometry_msgs/Twist.h>
#include <sensor_msgs/Joy.h>
#include <leap_teleop/leap_motion.h>

class LeapTurtle {
public:
  LeapTurtle();

private:
  void leapCallback(const leap_teleop::leap_motion::ConstPtr& msg);
  ros::NodeHandle nh;
  ros::Publisher pub;
  ros::Subscriber sub;
};

LeapTurtle::LeapTurtle() {
  sub = nh.subscribe<leap_teleop::leap_motion>(
      "/hands_motion", 10, &LeapTurtle::leapCallback, this);
  pub = nh.advertise<geometry_msgs::Twist>("turtle1/cmd_vel", 1);
}

void LeapTurtle::leapCallback(const leap_teleop::leap_motion::ConstPtr& msg) {
  std::cout << "frame id: " << msg->header.frame_id << std::endl;
  std::cout << "timestamp: " << msg->header.stamp << std::endl;
  std::cout << "seq: " << msg->header.seq << std::endl;
  std::cout << "hand id: " << msg->hand_id << std::endl;
  std::cout << "direction: \n" << msg->direction << std::endl;
  std::cout << "normal: \n" << msg->normal << std::endl;
  std::cout << "velocity: \n" << msg->velocity << std::endl;
  std::cout << "palmpos: \n" << msg->palmpos << std::endl;
  std::cout << "ypr: \n" << msg->ypr << std::endl;
  std::cout << "--------------" << std::endl;

  // Map hand pitch (ypr.x) to forward speed and hand roll (ypr.y) to turn rate.
  geometry_msgs::Twist twist;
  twist.linear.x = -0.15*msg->ypr.x;
  twist.angular.z = 0.15*msg->ypr.y;
  ROS_INFO_STREAM("(" << msg->ypr.x << " " << msg->ypr.y << ")");
  pub.publish(twist);
}

int main(int argc, char** argv) {
  ros::init(argc, argv, "leap_turtle_teleop");
  LeapTurtle leap_turtle;
  ros::spin();
  return 0;
}
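The control law inside leapCallback is just a linear gain on two angles. The following is a minimal Python sketch of that mapping, assuming the same 0.15 gain and sign convention as the C++ node; `ypr_to_twist` is a hypothetical helper name, and it returns a plain tuple rather than a geometry_msgs/Twist so that it runs without ROS.

```python
GAIN = 0.15  # same gain as the C++ teleop node

def ypr_to_twist(ypr_x_deg, ypr_y_deg, gain=GAIN):
    """Return (linear_x, angular_z) for the turtle.

    ypr_x_deg: the value published in ypr.x (hand pitch, degrees)
    ypr_y_deg: the value published in ypr.y (hand roll, degrees)
    """
    linear_x = -gain * ypr_x_deg   # negative pitch drives the turtle forward
    angular_z = gain * ypr_y_deg   # roll steers the turtle
    return linear_x, angular_z

linear, angular = ypr_to_twist(-20.0, 10.0)
print(linear, angular)
```

Because the mapping has no dead zone, even a slightly tilted hand makes the turtle drift; adding a small threshold around zero would be one way to address that, though the original node does not do so.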

I want to run it as a stereo camera!

However, it fails with the error below. Still investigating the cause.

[ERROR] [1510999568.734786707]: Skipping XML Document "/opt/ros/kinetic/share/gmapping/nodelet_plugins.xml" which had no Root Element. This likely means the XML is malformed or missing.

This issue is the cause.

Solved by building the package from source in an overlay workspace.

Explanation on the official Leap Motion site ↓

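Studying ROS x C++

Background

I want to be able to use things that are only practical in C++, such as PCL and high-speed image processing.

Working out the ROS package layout

Minimal configuration (hello world)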

CMakeLists.txt

cmake_minimum_required(VERSION 2.8.3)

project(hello)

find_package(catkin REQUIRED COMPONENTS

roscpp

std_msgs

)

catkin_package(

# INCLUDE_DIRS include

# LIBRARIES chapter03

# CATKIN_DEPENDS roscpp std_msgs

# DEPENDS system_lib

)

include_directories(

# include

${catkin_INCLUDE_DIRS}

)

add_executable(hello_world src/hello_world.cpp)

target_link_libraries(hello_world ${catkin_LIBRARIES})

package.xml

<?xml version="1.0"?>
<package format="2">
  <name>hello</name>
  <version>0.0.0</version>
  <description>The hello package</description>
  <maintainer email="mouse@todo.todo">mouse</maintainer>
  <license>TODO</license>
  <buildtool_depend>catkin</buildtool_depend>
  <build_depend>roscpp</build_depend>
  <build_depend>std_msgs</build_depend>
  <build_export_depend>roscpp</build_export_depend>
  <build_export_depend>std_msgs</build_export_depend>
  <exec_depend>roscpp</exec_depend>
  <exec_depend>std_msgs</exec_depend>
</package>

hello_world.cpp

#include <ros/ros.h>

int main(int argc, char **argv)
{
  ros::init(argc, argv, "hello_world");
  ros::NodeHandle nh;
  ros::Rate rate(1);
  while (ros::ok()) {
    ROS_INFO_STREAM("Hello ROS world !!!");
    rate.sleep();
  }
  return 0;
}

ImageTransport

image_transport/Tutorials - ROS Wiki

cv_bridge/Tutorials/UsingCvBridgeToConvertBetweenROSImagesAndOpenCVImages - ROS Wiki

subscriber

#include <cstdlib>
#include <ros/ros.h>
#include <image_transport/image_transport.h>
#include <opencv2/highgui/highgui.hpp>
#include <cv_bridge/cv_bridge.h>

// Use one window name everywhere so that imshow draws into the window created in main.
static const char* const WINDOW_NAME = "ImageSubscriber";

void imageCallback(const sensor_msgs::ImageConstPtr& msg)
{
  try {
    cv::imshow(WINDOW_NAME, cv_bridge::toCvShare(msg, "bgr8")->image);
    if (cv::waitKey(30) >= 0) ros::shutdown();
  } catch (cv_bridge::Exception& e) {
    ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());
  }
}

int main(int argc, char **argv)
{
  ros::init(argc, argv, "camera_subscriber");
  ros::NodeHandle nh;
  cv::namedWindow(WINDOW_NAME);
  cv::startWindowThread();
  image_transport::ImageTransport it(nh);
  image_transport::Subscriber sub;
  sub = it.subscribe("/usb_cam/image_raw", 1, imageCallback);
  ros::spin();
  cv::destroyWindow(WINDOW_NAME);
  return EXIT_SUCCESS;
}

camera subscriber: the callback also receives the camera info

#include <cstdlib>
#include <ros/ros.h>
#include <image_transport/image_transport.h>
#include <opencv2/highgui/highgui.hpp>
#include <cv_bridge/cv_bridge.h>

// Use one window name everywhere so that imshow draws into the window created in main.
static const char* const WINDOW_NAME = "ImageCameraSubscriber";

void imageCallback(const sensor_msgs::ImageConstPtr& msg,
                   const sensor_msgs::CameraInfoConstPtr& info)
{
  try {
    cv::imshow(WINDOW_NAME, cv_bridge::toCvShare(msg, "bgr8")->image);
    if (cv::waitKey(30) >= 0) ros::shutdown();
  } catch (cv_bridge::Exception& e) {
    ROS_ERROR("Could not convert from '%s' to 'bgr8'.", msg->encoding.c_str());
  }
}

int main(int argc, char **argv)
{
  ros::init(argc, argv, "camera_camerasubscriber");
  ros::NodeHandle nh;
  cv::namedWindow(WINDOW_NAME);
  cv::startWindowThread();
  image_transport::ImageTransport it(nh);
  // A callback taking (Image, CameraInfo) needs subscribeCamera, not subscribe.
  image_transport::CameraSubscriber sub =
      it.subscribeCamera("/usb_cam/image_raw", 1, imageCallback);
  ros::spin();
  cv::destroyWindow(WINDOW_NAME);
  return EXIT_SUCCESS;
}

Reference:

image_transport/Tutorials/SubscribingToImages - ROS Wiki

PCL

TODO

Building a deep learning environment with Docker

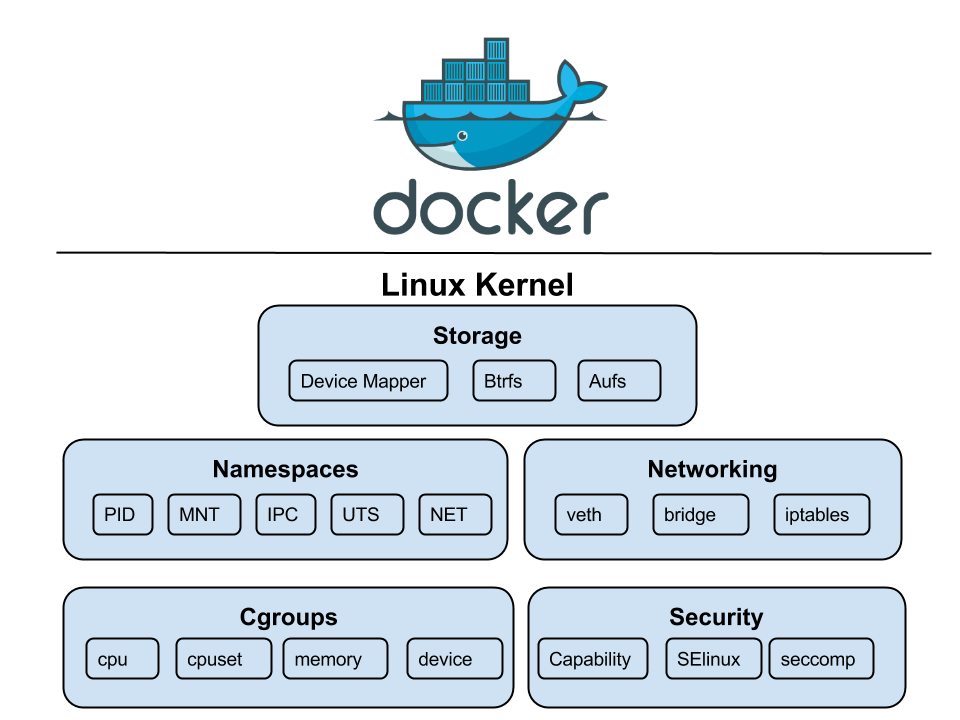

The Linux kernel features behind Docker

Reference:

Etsukata blog: Docker を支える Linux Kernel の機能 (概要編)

Docker内部で利用されているLinuxカーネルの機能 (namespace/cgroups) - Qiita

Linuxカーネル Docker関連 namespaceのメモ « Rest Term

NVIDIA-DOCKER

Internals · NVIDIA/nvidia-docker Wiki · GitHub

Motivation · NVIDIA/nvidia-docker Wiki · GitHub

Environment setup

nvidia-docker でポータブルな機械学習の作業環境を作る - Qiita

The article above is my textbook for this setup. Thank you for the clear explanation.

Steps

Install docker: Get Docker CE for Ubuntu | Docker Documentation

Install nvidia-docker: GitHub - NVIDIA/nvidia-docker: Build and run Docker containers leveraging NVIDIA GPUs

Make docker usable without sudo: Dockerコマンドをsudoなしで実行する方法 - Qiita

Write the Dockerfile (the blueprint for the initial state of the work environment)

Build the image (the initial state of the work environment)

Create the container and log in (create a new work environment from the image)

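Workflow

First time

1. Write the Dockerfile

2. Build the image with nvidia-docker build ... If that fails, goto 1

3. Create the container and log in with nvidia-docker run ... Check the container environment, then exit to stop the container

Working in the container and on the host

1. Start the container and log in with docker start ...

2. Start screen|tmux

3. Do some work inside the container

4. Press ctrl-p + ctrl-q to return to the host for a while

5. Do some work on the host

6. Return to the container with docker attach ... goto 2

7. When the work is done, stop the container with exit

8. To resume work later, goto 1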
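The workflow above can be sketched as a handful of command lines. The snippet below only assembles and prints them; it does not run docker. The image and container names are the ones used in the run examples later in this post, while the concrete flags standing in for the "..." (`-t` for build, `-ai` for start) are my assumptions, not something the referenced article prescribes.

```python
import shlex

IMAGE = "docker-gpu-test-workspace"  # image name used in the run examples of this post
CONTAINER = "my-workspace"           # container name used in the run examples of this post

def build_cmd(image=IMAGE, context="."):
    # First time, step 2: build the image from the Dockerfile in `context` (assumed flags).
    return ["nvidia-docker", "build", "-t", image, context]

def first_run_cmd(image=IMAGE, name=CONTAINER, shell="/usr/bin/zsh"):
    # First time, step 3: create the container and log in.
    return ["nvidia-docker", "run", "--name", name, "-ti", image, shell]

def resume_cmd(name=CONTAINER):
    # Daily work, step 1: restart the stopped container and attach to it (assumed flags).
    return ["docker", "start", "-ai", name]

def attach_cmd(name=CONTAINER):
    # Daily work, step 6: go back into a container that was detached with ctrl-p + ctrl-q.
    return ["docker", "attach", name]

for cmd in (build_cmd(), first_run_cmd(), resume_cmd(), attach_cmd()):
    print(" ".join(shlex.quote(part) for part in cmd))
```

Keeping the commands as argument lists makes it easy to pass them to `subprocess.run` later if the workflow should be scripted.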

Dockerfile

Reference:

Dockerfile for keras: 趣味でディープラーニングするための GPU 環境を安上がりに作る方法 - Qiita

Dockerfile for chainer: nvdia-docker で ディープラーニング用の環境を作る – GitHub 出張所 – プログラム関係のブログはここに

Example 1: Dockerfile

nvidia-docker/Dockerfile at master · NVIDIA/nvidia-docker · GitHub

FROM nvidia/cuda:8.0-devel-ubuntu16.04
CMD nvidia-smi -q

Example 2: Dockerfile

FROM nvidia/cuda:8.0-cudnn6-devel-ubuntu16.04

ENV DEBIAN_FRONTEND "noninteractive"

RUN apt-get update

RUN apt-get -y \

-o Dpkg::Options::="--force-confdef" \

-o Dpkg::Options::="--force-confold" dist-upgrade

RUN apt-get install -y --no-install-recommends \

sudo ssh \

zsh screen cmake unzip git curl wget vim tree htop \

python-dev python-pip python-setuptools \

python3-dev python3-pip python3-setuptools \

build-essential \

graphviz \

libatlas-base-dev libopenblas-base libopenblas-dev liblapack-dev \

build-essential \

libopencv-dev \

python-numpy \

protobuf-compiler

RUN groupadd -g 1942 ubuntu

RUN useradd -m -u 1942 -g 1942 -d /home/ubuntu ubuntu

RUN echo "ubuntu ALL=(ALL) NOPASSWD:ALL" >> /etc/sudoers

RUN chown -R ubuntu:ubuntu /home/ubuntu

RUN chsh -s /usr/bin/zsh ubuntu

USER ubuntu

WORKDIR /home/ubuntu

ENV HOME /home/ubuntu

#### zsh ####

RUN bash -c "$(curl -fsSL https://raw.githubusercontent.com/robbyrussell/oh-my-zsh/master/tools/install.sh)"

#### zsh path setting ####

RUN echo 'export PATH=/usr/local/cuda/bin:$PATH' >> /home/ubuntu/.zshrc

RUN echo 'export LD_LIBRARY_PATH=/usr/local/cuda/lib64:$LD_LIBRARY_PATH' >> /home/ubuntu/.zshrc

RUN echo 'export LD_LIBRARY_PATH=/usr/local/nvidia/lib64:$LD_LIBRARY_PATH' >> /home/ubuntu/.zshrc

RUN echo 'export CPATH=/usr/local/cuda-8.0/targets/x86_64-linux/include:$CPATH' >> /home/ubuntu/.zshrc

RUN echo 'export CPATH=$HOME/cuda/include:$CPATH' >> /home/ubuntu/.zshrc

RUN echo 'export LIBRARY_PATH=$HOME/cuda/lib64:$LIBRARY_PATH' >> /home/ubuntu/.zshrc

RUN echo 'export LD_LIBRARY_PATH=$HOME/cuda/lib64:$LD_LIBRARY_PATH' >> /home/ubuntu/.zshrc

RUN echo 'export LC_ALL=C.UTF-8' >> /home/ubuntu/.zshrc

RUN echo 'export LANG=C.UTF-8' >> /home/ubuntu/.zshrc

RUN echo 'shell "/usr/bin/zsh"' >> /home/ubuntu/.screenrc

#### pyenv install ####

RUN git clone https://github.com/yyuu/pyenv.git /home/ubuntu/.pyenv

RUN echo 'export PYENV_ROOT="$HOME/.pyenv"' >> /home/ubuntu/.zshrc

RUN echo 'export PATH="$PYENV_ROOT/bin:$PATH"' >> /home/ubuntu/.zshrc

RUN echo 'eval "$(pyenv init -)"' >> /home/ubuntu/.zshrc

ENV PYENV_ROOT $HOME/.pyenv

ENV PATH $PYENV_ROOT/shims:$PYENV_ROOT/bin:$PATH

RUN eval "$(pyenv init -)"

#### anaconda ####

RUN pyenv install anaconda3-4.2.0

RUN pyenv rehash

RUN pyenv global anaconda3-4.2.0

RUN conda update --all

RUN pip install --upgrade pip

#### tensorflow ####

RUN pip install cython

RUN pip install tensorflow-gpu

RUN pip install keras

WORKDIR $HOME/

Example 3: Dockerfile with many frameworks included: 深層学習全部入りコンテナ(Keras/TensorFlow/Chainer/Pytorch/Open AI Gym/Anaconda)で、nividia-dockerを使う - Qiita

FROM nvidia/cuda:8.0-cudnn6-runtime
RUN apt-get update
RUN apt-get install -y curl git unzip imagemagick bzip2
RUN git clone git://github.com/yyuu/pyenv.git .pyenv
WORKDIR /
ENV HOME /
ENV PYENV_ROOT /.pyenv
ENV PATH $PYENV_ROOT/shims:$PYENV_ROOT/bin:$PATH
RUN pyenv install anaconda3-4.4.0
RUN pyenv global anaconda3-4.4.0
RUN pyenv rehash
RUN pip install opencv-python tqdm h5py keras tensorflow-gpu kaggle-cli gym
RUN pip install chainer
RUN pip install http://download.pytorch.org/whl/cu80/torch-0.2.0.post3-cp36-cp36m-manylinux1_x86_64.whl
RUN pip install torchvision

Building the image

chainer image: https://hub.docker.com/r/chainer/chainer/

pytorch image: GitHub - pytorch/pytorch: Tensors and Dynamic neural networks in Python with strong GPU acceleration

PyTorchのDockerイメージを作成してみた | SoraLab / ソララボ

https://hub.docker.com/search/?isAutomated=0&isOfficial=0&page=1&pullCount=0&q=pytorch&starCount=0

Creating the container and logging in

Example 1

nvidia-docker run -it docker-gpu-test-workspace

Example 2

nvidia-docker run \

--name my-workspace \

-v /data/:/data/ \

--net="host" \

-ti \

docker-gpu-test-workspace \

/usr/bin/zsh

test sample

Example 1:

(container)# apt install wget
(container)# wget https://raw.githubusercontent.com/fchollet/keras/master/examples/mnist_cnn.py
(container)# python3 mnist_cnn.py

Result

Using TensorFlow backend.
2017-11-12 08:16:25.823619: I tensorflow/core/platform/cpu_feature_guard.cc:137] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA
2017-11-12 08:16:25.912916: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:892] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero
2017-11-12 08:16:25.913187: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1030] Found device 0 with properties:
name: GeForce GTX 950M major: 5 minor: 0 memoryClockRate(GHz): 0.928
pciBusID: 0000:01:00.0
totalMemory: 1.96GiB freeMemory: 1.61GiB
2017-11-12 08:16:25.913216: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120] Creating TensorFlow device (/device:GPU:0) -> (device: 0, name: GeForce GTX 950M, pci bus id: 0000:01:00.0, compute capability: 5.0)
Downloading data from https://s3.amazonaws.com/img-datasets/mnist.npz
11493376/11490434 [==============================] - 16s 1us/step
x_train shape: (60000, 28, 28, 1)
60000 train samples
10000 test samples
Train on 60000 samples, validate on 10000 samples
Epoch 1/12
2017-11-12 08:16:43.096840: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1120] Creating TensorFlow device (/device:GPU:0) -> (device: 0, name: GeForce GTX 950M, pci bus id: 0000:01:00.0, compute capability: 5.0)
60000/60000 [==============================] - 135s 2ms/step - loss: 0.3366 - acc: 0.8971 - val_loss: 0.0830 - val_acc: 0.9757
Epoch 2/12
60000/60000 [==============================] - 19s 316us/step - loss: 0.1126 - acc: 0.9666 - val_loss: 0.0527 - val_acc: 0.9831
Epoch 3/12
60000/60000 [==============================] - 18s 308us/step - loss: 0.0841 - acc: 0.9747 - val_loss: 0.0414 - val_acc: 0.9860
Epoch 4/12
60000/60000 [==============================] - 19s 310us/step - loss: 0.0695 - acc: 0.9792 - val_loss: 0.0402 - val_acc: 0.9864
Epoch 5/12
60000/60000 [==============================] - 18s 307us/step - loss: 0.0606 - acc: 0.9821 - val_loss: 0.0347 - val_acc: 0.9885
Epoch 6/12
60000/60000 [==============================] - 19s 314us/step - loss: 0.0548 - acc: 0.9842 - val_loss: 0.0319 - val_acc: 0.9897
Epoch 7/12
60000/60000 [==============================] - 19s 309us/step - loss: 0.0513 - acc: 0.9849 - val_loss: 0.0317 - val_acc: 0.9895
Epoch 8/12
60000/60000 [==============================] - 19s 310us/step - loss: 0.0473 - acc: 0.9861 - val_loss: 0.0319 - val_acc: 0.9892
Epoch 9/12
60000/60000 [==============================] - 19s 310us/step - loss: 0.0423 - acc: 0.9872 - val_loss: 0.0290 - val_acc: 0.9907
Epoch 10/12
60000/60000 [==============================] - 18s 307us/step - loss: 0.0411 - acc: 0.9878 - val_loss: 0.0299 - val_acc: 0.9904
Epoch 11/12
60000/60000 [==============================] - 18s 304us/step - loss: 0.0383 - acc: 0.9887 - val_loss: 0.0294 - val_acc: 0.9902
Epoch 12/12
60000/60000 [==============================] - 18s 306us/step - loss: 0.0361 - acc: 0.9887 - val_loss: 0.0354 - val_acc: 0.9890
Test loss: 0.035408690984
Test accuracy: 0.989

If it runs, that's good enough.
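To check a run like the one above without reading every line, the per-epoch metrics can be pulled out of the log text. This is a minimal sketch that assumes the progress-line format shown in the result above (`loss: ... - acc: ... - val_loss: ... - val_acc: ...`); `parse_metrics` is a hypothetical helper, and the two sample lines are copied from epochs 1 and 2 of that log.

```python
import re

# Matches the metric part of a Keras progress line as printed in the log above.
EPOCH_LINE = re.compile(
    r"loss: (?P<loss>\d+\.\d+) - acc: (?P<acc>\d+\.\d+)"
    r" - val_loss: (?P<val_loss>\d+\.\d+) - val_acc: (?P<val_acc>\d+\.\d+)"
)

def parse_metrics(log_text):
    """Return one dict of floats per epoch line found in log_text."""
    return [
        {name: float(value) for name, value in match.groupdict().items()}
        for match in EPOCH_LINE.finditer(log_text)
    ]

sample = (
    "60000/60000 [==============================] - 135s 2ms/step"
    " - loss: 0.3366 - acc: 0.8971 - val_loss: 0.0830 - val_acc: 0.9757\n"
    "60000/60000 [==============================] - 19s 316us/step"
    " - loss: 0.1126 - acc: 0.9666 - val_loss: 0.0527 - val_acc: 0.9831\n"
)

metrics = parse_metrics(sample)
best = max(metrics, key=lambda m: m["val_acc"])
print(len(metrics), best["val_acc"])
```

Other Keras versions print different metric names, so the pattern would need adjusting for them.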

Useful references

nvidia-docker - AdwaysEngineersBlog - Adwaysエンジニアブログ

PyTorchのDockerイメージを作成してみた | SoraLab / ソララボ

PyTorch の Dockerfile に Opencv もインストールして使えるようにする - Qiita

深層学習全部入りコンテナ(Keras/TensorFlow/Chainer/Pytorch/Open AI Gym/Anaconda)で、nividia-dockerを使う - Qiita

Studying threads (with python)

Learn how threads work and how to implement them by reading ROS x thread x python code.

Parallelism in python

threading.Thread

multiprocessing.Process

Pythonのマルチスレッド処理:threading, multiprocessing | UX MILK

About python x thread

Pythonのthreading.Threadとmultiprocessing.Process [ pLog ] 👈 Nice!

Python の threading.Lock を試してみる | CUBE SUGAR STORAGE

About copy in python

8.10. copy — 浅いコピーおよび深いコピー操作 — Python 3.5.3 ドキュメント

https://teratail.com/questions/40811

About pass-by-value and pass-by-reference in python

Pythonの値渡しと参照渡し - amacbee's blog

copy – オブジェクトのコピー - Python Module of the Week

About self in python

和訳 なぜPythonのメソッド引数に明示的にselfと書くのか | TRIVIAL TECHNOLOGIES 4 @ats のイクメン日記

ROS x thread x python code

jsk_robot/OdometryFeedbackWrapper.py at master · jsk-ros-pkg/jsk_robot · GitHub

self.lock = threading.Lock()
~
def source_odom_callback(self, msg):
    with self.lock:
        ~

Thread safety is achieved with a lock, as in the excerpt above.
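The same pattern can be tried outside ROS. The following is a minimal sketch, not taken from jsk_robot: a hypothetical `Counter` class whose `increment` plays the role of a callback that several threads may enter at once. The `with self.lock:` block guarantees that only one thread at a time performs the read-modify-write.

```python
import threading

class Counter:
    def __init__(self):
        self.lock = threading.Lock()
        self.value = 0

    def increment(self):
        # Only one thread at a time may execute this block.
        with self.lock:
            self.value += 1

def worker(counter, n):
    for _ in range(n):
        counter.increment()

counter = Counter()
threads = [threading.Thread(target=worker, args=(counter, 10000)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(counter.value)  # 4 threads x 10000 increments = 40000
```

In rospy, subscriber callbacks run in their own threads, which is why callbacks that share state with each other need this kind of protection.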

def feedback_odom_callback(self, msg):
    if not self.odom:
        return
    self.feedback_odom = msg
    with self.lock:
        # check distribution accuracy
        nearest_odom = copy.deepcopy(self.odom)
        nearest_dt = (self.feedback_odom.header.stamp - self.odom.header.stamp).to_sec()

While the lock is held, the contents of self.odom are deep-copied into nearest_odom.

Reference: Python の threading.Lock を試してみる | CUBE SUGAR STORAGE
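Why a deep copy rather than a plain assignment? A minimal sketch, using a hypothetical `OdomCache` class with a dict standing in for the odometry message: a snapshot taken with `copy.deepcopy` under the lock stays unchanged after later updates, whereas a plain reference sees every update.

```python
import copy
import threading

class OdomCache:
    def __init__(self):
        self.lock = threading.Lock()
        # A dict stands in for the odometry message in this sketch.
        self.odom = {"stamp": 0.0, "pose": [0.0, 0.0, 0.0]}

    def update(self, stamp, pose):
        # Writer side: modify the shared object in place while holding the lock.
        with self.lock:
            self.odom["stamp"] = stamp
            self.odom["pose"][:] = pose

    def snapshot(self):
        # Reader side: copy the whole object while holding the lock,
        # so the caller can keep using it after the lock is released.
        with self.lock:
            return copy.deepcopy(self.odom)

cache = OdomCache()
snap = cache.snapshot()   # independent copy
alias = cache.odom        # just another name for the shared object
cache.update(1.0, [1.0, 2.0, 3.0])

print(snap["pose"], alias["pose"])  # [0.0, 0.0, 0.0] [1.0, 2.0, 3.0]
```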

dynamic_reconfigure/server.py at master · ros/dynamic_reconfigure · GitHub

mutex: mutual exclusion http://wa3.i-3-i.info/word13360.html

Same as above: it uses a lock.

ドローン画像取得 (Erle-Copter, ArduPilot) - Qiita

import copy
from threading import Thread, Lock

import rospy
from sensor_msgs.msg import CompressedImage

VERBOSE = True

mutex = Lock()
topic_name = "/camera/image/compressed"

class web_video_server:
    def __init__(self):
        '''Initialize ros publisher, ros subscriber'''
        # latest image payload; None until the first message arrives
        self.np_arr = None
        # subscribed Topic
        self.subscriber = rospy.Subscriber(topic_name, CompressedImage, self.callback, queue_size = 1)
        if VERBOSE:
            print("subscribed to " + topic_name)

    def getCompressedImage(self):
        mutex.acquire(1)
        result = copy.deepcopy(self.np_arr)
        mutex.release()
        return result

    def callback(self, ros_data):
        '''Callback function of subscribed topic. '''
        mutex.acquire(1)
        self.np_arr = ros_data.data
        mutex.release()

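This one uses acquire and release. The with self.lock: form shown earlier works just as well.

While the lock is held, the contents of self.np_arr are deep-copied into result.

The lock is taken both when the image is fetched and when the callback updates it.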
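The two styles can be compared side by side. This is a minimal sketch with hypothetical function names: when acquire/release is used by hand, wrapping the critical section in try/finally ensures the lock is released even if an exception is raised, which the `with` statement does automatically.

```python
import threading

mutex = threading.Lock()
shared = {"data": None}

def set_with_acquire(value):
    # Explicit style: release in `finally` so an exception cannot leave the lock held.
    mutex.acquire()
    try:
        shared["data"] = value
    finally:
        mutex.release()

def set_with_context(value):
    # Context-manager style: acquire on entry, release on exit, even on exceptions.
    with mutex:
        shared["data"] = value

def failing_update():
    with mutex:
        raise ValueError("simulated failure inside the critical section")

set_with_acquire(b"frame-1")
set_with_context(b"frame-2")
try:
    failing_update()
except ValueError:
    pass

# The lock is free again even though failing_update raised.
print(shared["data"], mutex.locked())  # b'frame-2' False
```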

robot_blockly/image_server.py at master · erlerobot/robot_blockly · GitHub

http://files.cnblogs.com/files/cv-pr/ros_by_example_vol2_indigo.pdf

General references

Pythonで学ぶ 基礎からのプログラミング入門 (33) マルチスレッド処理を理解しよう(後編) | マイナビニュース